ANSYS ADVANTAGE MAGAZINE

DATE: 2019

Scalable Approach to Tackle Increasing Chip Complexity

By Anton Rozen, Director of Backend Design, Mellanox Technologies, Israel

Increasing design complexity and multiphysics challenges hamper the productivity of system-on-chip (SoC) design teams. Engineers want electronic design automation tools that not only reduce runtime but also give them increased flexibility to critically examine and improve their designs. Mellanox engineers apply new solutions that leverage big data techniques and flexible computing resources to deliver this functionality.

High-speed networking is the backbone of connectivity in data centers. Extreme bandwidth and ultra-low-latency networking solutions are critical for the next era of data centers to efficiently process exponentially growing data from emerging AI, 5G and autonomous applications. Companies performing system-on-chip (SoC) designs for networking are challenged as chip size and complexity clash with ever-increasing time-to-market pressures. Grid complexity and the sheer number of gates increase dramatically each year, and network IC teams must design, analyze and tape out chips with die areas of 400–500 mm² or more.

As Mellanox has pushed designs into ultra-deep submicron nodes, the design complexity — and the need for more flexible and scalable design tool solutions — has increased.

Increased cross coupling of various multiphysics effects, including power and thermal reliability, poses significant challenges for FinFET design closure. Multiphysics analysis is critical to overcoming these challenges in order to design extremely large, complex and power-hungry chips, despite narrowing design margins and tighter project schedules.

Faced with this complexity, design teams must have software tools that deliver capacity, flexibility, speed and accuracy.

Mellanox, a leading supplier of end-to-end Ethernet and InfiniBand intelligent interconnect solutions and services for servers, storage and hyper-converged infrastructure, knows these challenges and trade-offs firsthand. The design teams must manage and validate designs by making the most efficient use of computing resources and engineering time. To this end, the team relied on Ansys RedHawk-SC software.

LOOKING FOR VISIBILITY

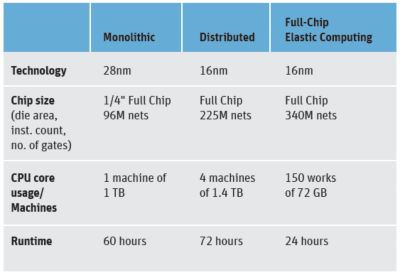

The Mellanox team needed fast turnaround time with pinpoint voltage drop accuracy to ensure power integrity and reliability for their highly complex network processors. But they also sought something that had eluded them in earlier years on other big, high-complexity designs: flexibility and speed of analysis. Because designs have evolved from a little more than 100 million nets at the 45nm node to nearly 350 million nets at 16nm, Mellanox estimates it will need to address nearly 450 million IC nets at 7nm.

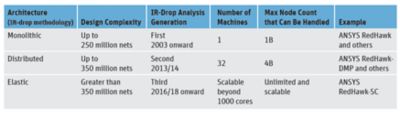

Scalability comparison: How the evolution of software has drastically reduced runtime for increasingly complex SoCs

This type of evolution requires tool capacity to match. A decade ago, in and around the 45nm process node, tool architectures were generally monolithic, and teams were restricted to a single machine that could handle up to 1 billion power and ground nodes at once. (A node is a connection point between any two elements in the power and ground network that are extracted. These elements could be parasitic resistance, inductance or capacitance of the wire or device instance pin connected to the wire. Node count is a metric commonly used in power integrity analysis to predict the design size; it directly impacts the runtime and memory requirements for the analysis.)

In those days, tool capacity was an issue. When conducting multiple analyses for power integrity and reliability signoff, each run (in serial rather than parallel) might take more than 24 hours. This required large servers and considerable resource allocation to complete the analysis. Worse, the system occasionally had trouble managing the complexity and would crash. The analysis then would have to be restarted from scratch.

A second generation emerged to keep up with complexity. This generation leveraged distributed compute, could scale to up to 32 machines and could handle a maximum of 4 billion nodes. This was satisfactory until ICs became even more complex.

SCALING TO BIG DATA REQUIREMENTS

To deliver insights and enable the team to optimize its design, Mellanox needed a flexible, high-capacity solution that would scale for big data mining and analytics. Engineers began using Ansys RedHawk-SC in 2018. RedHawk-SC is the latest SoC power integrity and reliability signoff platform built on Ansys SeaScape, the world's first custom-designed big data architecture for electronic system design and simulation. SeaScape provides per-core scalability, flexible design data access, instantaneous design bring-up and many other capabilities.

Ansys SeaScape big data elastic compute architecture

One of the keys to success lies in the elastic computing capabilities of RedHawk-SC. Elastic computing helps to process scenarios in parallel (or in serial), depending on the number of CPU cores available.
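The idea of running scenarios in parallel or in serial depending on available cores can be illustrated with a minimal Python sketch. This is not RedHawk-SC code: `analyze_scenario`, `run_scenarios` and the scenario names are hypothetical placeholders, and threads stand in for the worker processes or machines a real elastic system would use.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def analyze_scenario(name):
    """Hypothetical stand-in for one analysis scenario (not a real Ansys call)."""
    return {"scenario": name, "checked": True}


def run_scenarios(scenarios, available_cores=None):
    """Run scenarios in parallel when cores allow, otherwise one at a time."""
    cores = available_cores or os.cpu_count() or 1
    # Never start more workers than there are scenarios, and always at least one.
    workers = max(1, min(cores, len(scenarios)))
    if workers == 1:
        # Serial fallback: a single core processes the scenarios in order.
        return [analyze_scenario(s) for s in scenarios]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in the same order as the inputs.
        return list(pool.map(analyze_scenario, scenarios))


# Assumed scenario names, for illustration only.
results = run_scenarios(["static_ir", "dynamic_ir", "em"], available_cores=2)
```

The same call works with one core or many; only the degree of parallelism changes, which is the property the article describes as elastic.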

The SeaScape architecture is central to elastic computing. It rests on a distributed data/file service, since data may be scattered around many locations. On top of this sits a distributed data analytics layer based on the MapReduce concept, which is fundamental to all big data analytics. Conceptually, this splits the data (mapping) into small chunks called shards and farms out each shard for analysis. Processing can be distributed to servers as they become available, across as many servers as needed.
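As a rough illustration of the shard-then-combine pattern, the Python sketch below finds the worst voltage drop in a small data set. It assumes made-up `(instance, drop in mV)` records and hypothetical helper names (`make_shards`, `map_shard`, `reduce_worst`); it shows the general MapReduce idea only, not SeaScape's implementation.

```python
from functools import reduce


def make_shards(records, shard_size):
    """Split the full record list into small, independent chunks (shards)."""
    return [records[i:i + shard_size] for i in range(0, len(records), shard_size)]


def map_shard(shard):
    """Map step: find the worst (largest) voltage drop within one shard."""
    return max(shard, key=lambda rec: rec[1])


def reduce_worst(a, b):
    """Reduce step: keep whichever partial result has the larger drop."""
    return a if a[1] >= b[1] else b


# Made-up (instance name, voltage drop in mV) records, for illustration only.
drops = [("u1", 12.0), ("u2", 48.5), ("u3", 7.2), ("u4", 31.0), ("u5", 52.3)]

shards = make_shards(drops, shard_size=2)
# Each shard could be handed to a different server; here they run in a loop.
partials = [map_shard(s) for s in shards]
worst = reduce(reduce_worst, partials)
```

Because each shard is processed independently, the map step can be handed to whichever servers are free, and only the small partial results need to be combined.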

THE POWER PROBLEM

The challenge in these types of network processors is total power consumption and power dissipation. Unlike battery-powered designs, the types of designs that Mellanox works with can consume more than 200 W. So, engineers must achieve complete design analysis — accurate incremental power integrity and reliability analysis — while considering high power consumption without sacrificing accuracy or time to results.

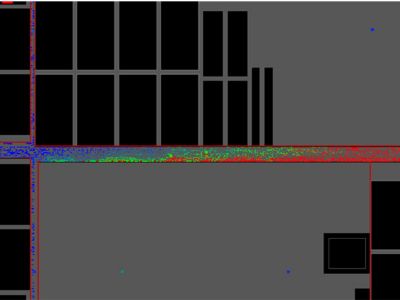

To speed up full-chip IR drop simulations, a power grid roll-up methodology can be leveraged to abstract the low- and mid-level metals of the power and ground network. Such abstraction can be used in full-chip simulations. This allows teams to work at the unit level and then jump up to the top level for a comprehensive analysis of the full-chip design.

An example of a power integrity simulation using Ansys RedHawk-SC roll-up methodology for abstracting low- and mid-level metal layers of the power grid for fast, incremental full chip analysis.

Doing a full-chip flat run is resource-intensive and time-consuming. By performing incremental analysis enabled by big data analytics techniques, designers can create a detailed view of a specific block and abstract everything else. This gives them faster analysis and enough visibility to make engineering change order (ECO) fixes more quickly and easily.

Ansys RedHawk-SC, with its elastic computing capabilities and big data–enabled analytics, gave engineers the visibility they needed to overcome some previous challenges. The team particularly appreciated RedHawk-SC's ability to monitor its own jobs and restart a job if it fails.
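The monitor-and-restart behavior can be pictured with a small Python sketch. It is an assumption-laden illustration, not RedHawk-SC's mechanism: `run_with_restart` and `flaky_job` are hypothetical names, and a failure is simulated with a `RuntimeError`.

```python
def run_with_restart(job, max_attempts=3):
    """Run job(); if it raises RuntimeError, start it again, up to max_attempts."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            # Return the job's result together with the attempt that succeeded.
            return job(), attempt
        except RuntimeError as err:
            last_error = err
    raise RuntimeError(f"job failed after {max_attempts} attempts") from last_error


# Hypothetical job that crashes twice and then succeeds.
calls = {"count": 0}


def flaky_job():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("simulated crash")
    return "analysis complete"


result, attempts = run_with_restart(flaky_job)
```

The supervisor here simply reruns the whole job; the point is only that recovery happens without an engineer noticing the crash and resubmitting by hand.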

The team also leveraged RedHawk-SC's elastic computing and its MapReduce-enabled analytics to gain key insights. MapReduce gives designers a bird's-eye view and lets them zero in on hotspots smoothly. It provides powerful capabilities, such as bringing up the GUI to view a full-chip database in less than two minutes and moving between areas of the chip easily, much like panning and zooming in Google Maps.

Additionally, it enables vastly more powerful compute flexibility. With RedHawk-SC's elastic scalability, large chip areas that once required huge computing resources can be broken into very small pieces for analysis. The nature of the architecture lets those elements be distributed through a company's computing resources. In this way, it maximizes hardware resource utilization and optimizes cost.

Scatter Plot for Static Voltage Comparison
