ANSYS BLOG

January 14, 2021

Simulation Lets Autonomous Vehicles See Their Way Clear of Bad Weather

Adverse weather conditions such as wind, rain, snow, fog and dust can rapidly appear on the road and dramatically affect how we perceive and drive. If the weather has an impact on our perception of the road, what impact does it have on advanced driver-assistance systems (ADAS) and autonomous vehicles (AV)?

One obstacle to launching a fully autonomous vehicle is achieving 100% confidence in autonomous systems’ data gathering, object detection and decision-making processes. Under every lighting level and weather condition, cameras must reliably “see” pedestrians and other physical objects and trigger an appropriate reaction from critical systems such as braking. This is especially challenging in rainy, foggy and snowy conditions, which can confuse visual cameras and can also impact the performance of lidar, radar and other conventional sensor technologies. Indeed, weather conditions and the associated sensor soiling have a direct impact on sensor performance, preventing optimal detection for decision making. The impact can be:

- Decreased visibility and contrast for the camera

- Lidar signal scattering, absorption and attenuation, as well as low-quality data for the perception algorithm and spurious results

- Radar that can detect objects in bad weather conditions but cannot classify them correctly

But how can the needed level of certainty be achieved when it is virtually impossible to mount a sensor on an autonomous vehicle and then subject it to thousands of miles of road testing under every possible atmospheric condition and over every possible terrain? Achieving 100% confidence using road testing alone could take hundreds of years.

Getting Good ADAS Data in Bad Weather

Automotive companies can evaluate the impact of weather through physical testing, using weather laboratories to create scenarios in a controlled environment and on-road testing to capture real conditions. A weather laboratory can provide repeatable weather data but does not account for weather intensification by other vehicles and dynamic conditions on the road. On-road testing exposes autonomous systems to real weather conditions, but it cannot be relied on for rapid technology development.

An effective alternative to physical testing is coupling computational fluid dynamics (CFD) and optical simulation solutions. These solutions can help self-driving car manufacturers rapidly develop weather-aware autonomous systems by providing a physics-based approach for different weather scenarios and performance testing for sensors and autonomous systems within a virtual environment.

CFD-optical solutions provide an effective way of designing and optimizing hardware like sensors, along with the embedded software that controls these sensors. Because simulation can be done very early in the design process, it can save time by detecting problems that are harder to solve later, when most of the design has been completed.

In CFD-optical solutions, Ansys Fluent is used to perform CFD simulations of various weather conditions including wind, rain, fog, snow and dust. Fluent can also be used to analyze weather-induced sensor soiling, droplet impingement and transition to film flows, fogging and surface condensation, frosting, icing and deicing phenomena. The result of these CFD simulations is high-fidelity, reproducible weather data for optical simulations.

CFD can also help analysts assess the weather’s impact on optical sensors and improve their design, performance, packaging and placement on the vehicle. Companies can study sensor layout virtually, searching for the most efficient sensor mix to improve the performance of autonomous vehicle sensors in adverse weather conditions. They can also use simulation to review and develop coating and cleaning systems for enhanced sensor perception; to perform reliability tests of perception algorithms; and to run edge case analyses.

Let’s take a deeper dive into two examples here: rain and fog.

Let it Rain on Camera and Lidar Systems

Let’s say, for example, you want to assess camera and lidar performance under moderate rainfall. CFD simulation enables you to simulate primary rain as well as water droplets generated by other sources, such as side splash from the tires or splashes and droplets generated by other vehicles on the road. After the CFD simulation of rainy conditions, the position and size of the droplets are exported from Fluent and imported into Speos, which generates the water droplets in the scene via the 3D texture feature. Their impact can then be tested on a camera sensor and the associated perception algorithm.

Below are the camera images from optical simulations with CFD generated rain data.

Clear, light rain and moderate rain conditions (L-R)

In light rain, the front car and pedestrian are detected with nearly the same confidence level as in clear sky conditions. The main degradation is in the detection of the left car, whose confidence level decreases from 0.87 in clear sky conditions to 0.47 in light rain. In moderate rain, the confidence level for the front car decreases from 0.98 to 0.87, while the side car and the pedestrian are not reliably detected (the confidence levels of those two objects fall below the confidence threshold of 0.2).
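To make these numbers concrete, here is a minimal Python sketch of how a confidence threshold separates reliable detections from unreliable ones, and how large the quoted drops are in relative terms. The `Detection` class and both helper functions are hypothetical names introduced for illustration, and the pedestrian value of 0.15 is an assumed placeholder (the article only says it is below 0.2). This is not how Speos or any particular perception algorithm reports results.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.2  # threshold quoted in the text


@dataclass
class Detection:
    label: str
    confidence: float


def reliable(detections, threshold=CONFIDENCE_THRESHOLD):
    """Keep only detections at or above the confidence threshold."""
    return [d for d in detections if d.confidence >= threshold]


def relative_drop(clear, degraded):
    """Fractional loss of confidence relative to the clear-sky value."""
    return (clear - degraded) / clear


# Confidence values quoted in the text.
print(f"left car, light rain: {relative_drop(0.87, 0.47):.0%} drop")       # 46% drop
print(f"front car, moderate rain: {relative_drop(0.98, 0.87):.0%} drop")   # 11% drop

# 0.87 is from the text; 0.15 is an ASSUMED value standing in for "below 0.2".
moderate_rain = [Detection("front car", 0.87), Detection("pedestrian", 0.15)]
print([d.label for d in reliable(moderate_rain)])  # ['front car']
```

Seen this way, light rain costs the left car almost half of its clear-sky confidence, while the front car loses only about a tenth even in moderate rain.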

Engineers can also evaluate the impact of the depth of field using the advanced camera model in Speos. Depth of field causes nearby objects to be blurry in camera images, which makes it difficult for perception algorithms to detect them. Weather intensity combined with depth of field is a challenge for perception algorithms.

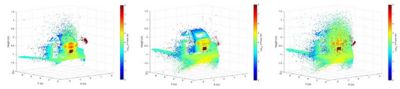

Rain also affects lidar results. Rain droplets can cause scattered and missing points in lidar point clouds. The density, size and distribution of the droplets all play important roles in lidar performance. Different lidar post-processing algorithms, such as using the last returns rather than peak returns, can be applied to mitigate these effects.

Droplets on the enclosure, which are closer to the lidar emitter, deflect the light much more than droplets in the air.

From left to right: light rain, moderate rain (no droplets on enclosure), moderate rain (droplets on enclosure)
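The difference between peak returns and last returns, mentioned above as a mitigation, can be sketched in a few lines of Python. The `Return` type, the function names and the sample pulse are all hypothetical, chosen only to illustrate the idea; real lidar firmware and the Speos lidar model expose their own data formats.

```python
from typing import NamedTuple, Optional, Sequence


class Return(NamedTuple):
    range_m: float    # distance of the echo from the sensor, in meters
    intensity: float  # received signal strength, arbitrary units


def strongest_return(returns: Sequence[Return]) -> Optional[Return]:
    """Peak-return strategy: keep the echo with the highest intensity."""
    return max(returns, key=lambda r: r.intensity, default=None)


def last_return(returns: Sequence[Return],
                min_intensity: float = 0.0) -> Optional[Return]:
    """Last-return strategy: keep the farthest echo above a noise floor."""
    candidates = [r for r in returns if r.intensity >= min_intensity]
    return max(candidates, key=lambda r: r.range_m, default=None)


# ASSUMED example pulse: a bright echo from a droplet close to the sensor,
# followed by a weaker echo from a vehicle farther down the road.
pulse = [Return(range_m=0.05, intensity=0.9), Return(range_m=32.0, intensity=0.4)]

print(strongest_return(pulse).range_m)  # 0.05 (the droplet)
print(last_return(pulse).range_m)       # 32.0 (the vehicle)
```

With this assumed pulse, the peak-return strategy reports the droplet, while the last-return strategy reports the vehicle behind it. The `min_intensity` floor is there because the farthest echo may otherwise be noise.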

To learn more about the impact of weather conditions on camera and lidar, view the on-demand webinar: Weather Simulation for Virtual Sensor Testing

Clear Up Foggy Conditions for Self-Driving Car Sensors

While current sensor technologies, including optical cameras and lidars, have fallen short under foggy conditions, there is a positive development: thermal imaging. Because they can sense far infrared radiation, thermal sensors reveal contrasts in temperature, which can be valuable in detecting people, animals and other heat-producing objects in fog and other visually obscure conditions.

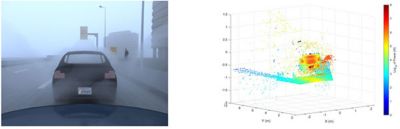

In a virtual driving scene recreated in Speos, realistic simulations were performed with a thermal camera and varying degrees of fog. The results showed a vast improvement in perception when a thermal camera is used in foggy conditions, compared to a visible (RGB) camera. This demonstrates that adding thermal imaging to the traditional sensor mix improves the performance of autonomous vehicle sensors in foggy conditions.

CFD-optical solutions can be used to create foggy conditions and test the performance of sensors and autonomous systems under those conditions. Homogeneous as well as non-homogeneous (varying in space and time) foggy conditions can be simulated using Fluent. Fog data from CFD is transferred to Speos to test visible camera, thermal camera and lidar performance under those foggy conditions. The following images show non-homogeneous fog and a vehicle in front of the car on the road, as seen by a visible camera and a lidar in optical simulations.

Left: visible camera, right: lidar
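For a rough feel of why homogeneous and non-homogeneous fog behave differently, the following Python sketch applies the textbook Beer-Lambert law along a single ray, with the Koschmieder relation linking visibility to an extinction coefficient. It is a simplified model under stated assumptions, not what Fluent or Speos compute internally. The function names, the 100 m visibility and the 50 m target distance are all hypothetical.

```python
import math


def extinction_from_visibility(visibility_m, contrast_threshold=0.02):
    """Koschmieder relation: alpha = -ln(threshold) / V, in 1/m."""
    return -math.log(contrast_threshold) / visibility_m


def optical_depth(alphas_per_m, step_m):
    """Sum extinction along a ray sampled at uniform steps.

    A constant list models homogeneous fog; a varying list models
    non-homogeneous fog (for example, values sampled from a CFD field).
    """
    return sum(alphas_per_m) * step_m


def one_way_transmission(tau):
    """Beer-Lambert: fraction of light surviving a single pass (camera)."""
    return math.exp(-tau)


def two_way_transmission(tau):
    """Lidar light crosses the fog twice: out to the target and back."""
    return math.exp(-2.0 * tau)


# ASSUMED scenario: homogeneous fog with 100 m visibility, target at 50 m.
alpha = extinction_from_visibility(100.0)
tau = optical_depth([alpha] * 50, step_m=1.0)

print(f"camera path transmission: {one_way_transmission(tau):.3f}")  # 0.141
print(f"lidar round-trip transmission: {two_way_transmission(tau):.3f}")  # 0.020
```

In this assumed case only about 2% of the emitted lidar light survives the round trip to a target at half the visibility distance, which illustrates why attenuation matters so much for lidar in fog.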

Clear Skies Ahead for ADAS Simulations

Automakers and OEMs can complement their physical weather tests with an expanded focus on simulations via Ansys solutions. Use of simulations in early design stages can help cut time and costs from the commercialization cycle of autonomous systems.

Effective perception, especially under adverse weather conditions, is one of the greatest challenges to achieving 100% reliability for autonomous vehicles today. Coupled CFD-optical solutions can help analysts address the challenges posed by weather conditions by developing end-to-end weather-aware solutions. These include designing sensors, optimizing their performance, strategically placing sensors on vehicles, designing sensor cleaning systems, testing perception algorithms and performing functional safety analyses.

This means that AV engineering teams can use Ansys simulations to increase and accelerate their testing and verification activities, and to perfect innovative mixed-capability sensor designs that dramatically enhance the vehicle’s perception.