ANSYS BLOG

February 25, 2022

Unleashing the Power of Multiple GPUs for CFD Simulations

Computational fluid dynamics (CFD) engineers are keenly interested in accelerating their simulation throughput, whether that’s by automating workflows, upgrading to newer or better methods, or using high-performance computing (HPC).

One topic of particular interest is the use of graphics processing units (GPUs) for CFD. This is not a new concept, but there are many ways to achieve it, some more complete than others. For instance, in recent years, the CFD community has seen a number of particle-based CFD methods utilize GPUs.

In the continuum Navier-Stokes realm, the concept of using GPUs as CFD accelerators has been around for years; take, for example, the NVIDIA AmgX solver, which has been available in Ansys Fluent since 2014. The local acceleration you get is extremely problem dependent, and in the end, the portion of the code not optimized for GPUs will throttle your overall speedup. Unleashing the full potential of GPUs for CFD requires that the entire code run resident on the GPU(s).
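The throttling effect described above is commonly modeled with Amdahl's law. The Python sketch below is only an illustration: the function name and the 10x local speedup are assumptions, not Fluent measurements. It shows how the share of runtime left on the CPU caps the overall gain.

```python
def overall_speedup(gpu_fraction: float, local_speedup: float) -> float:
    """Amdahl's law: whole-run speedup when only part of the runtime is accelerated.

    gpu_fraction  -- share of the original runtime that runs on the GPU (0..1)
    local_speedup -- how much faster that share runs once offloaded
    """
    if not 0.0 <= gpu_fraction <= 1.0:
        raise ValueError("gpu_fraction must be between 0 and 1")
    if local_speedup <= 0.0:
        raise ValueError("local_speedup must be positive")
    cpu_fraction = 1.0 - gpu_fraction  # part of the run that stays on the CPU
    return 1.0 / (cpu_fraction + gpu_fraction / local_speedup)


# Hypothetical numbers: the offloaded part runs 10x faster on the GPU.
for fraction in (0.5, 0.9, 1.0):
    print(f"{fraction:.0%} on GPU -> {overall_speedup(fraction, 10.0):.2f}x overall")
```

With half of the runtime left on the CPU, the overall speedup stays below 2x no matter how fast the GPU portion becomes. That is why a solver that runs entirely on the GPU can reach the full local speedup.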

Ansys has been a trailblazer in the use of GPU technology for simulation, and this year we are taking it to a whole new level with the introduction of the Ansys multi-GPU solver in Fluent.

Let’s take a look at the benefits of running CFD simulations on GPUs and when you should consider leveraging that option for your simulations.

Why Use GPUs for CFD Simulations?

In recent years, we have seen quantum leaps in the development of GPU hardware and dedicated programming languages that help developers architect CFD solvers equivalent to those designed for traditional CPUs, but with their modules ported to GPUs.

The main benefits of using GPUs for CFD simulation are:

- Increased performance. As our benchmarks show, a single GPU can offer the same performance as more than 400 CPU cores. And it gets even better on multiple GPUs: six GPUs can be as powerful as more than 2,000 CPU cores!

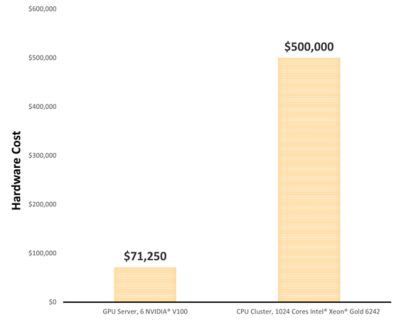

- Reduced hardware costs. The cost of a GPU server is significantly less than the cost of a CPU cluster of equivalent performance. That opens the door to the democratization of high-performance computing for CFD simulations!

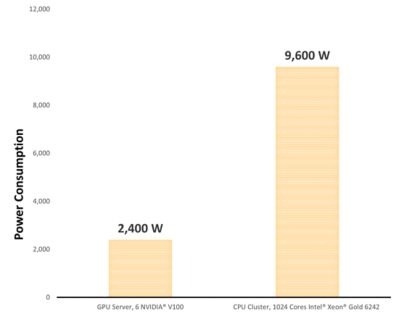

- Reduced power consumption. The cost of electricity is also a significant aspect for many companies running simulations on HPC clusters. GPUs help in reducing that cost significantly, thus increasing their simulation return on investment (ROI) when all costs are factored in.

CPUs vs GPUs: Comparing CFD Simulation Performance

Curious about the performance of the new multi-GPU solver? Watch the video below to hear what NVIDIA said during GTC 2021 in November.

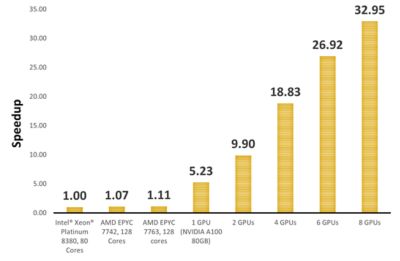

Let’s take a deep dive into an automotive external aerodynamics benchmark. We have seen that a single NVIDIA A100 GPU achieved more than 5 times greater performance than a cluster with 80 Intel® Xeon® Platinum 8380 cores.

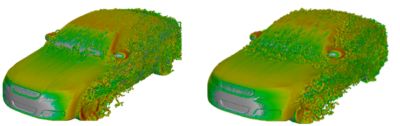

Figure 1: Automotive aerodynamics on CPU solver (left) and GPU solver (right)

Figure 2: Speedup for different CPU clusters and GPU server configurations

1 NVIDIA A100 GPU > 400 Intel® Xeon® Platinum 8380 Cores

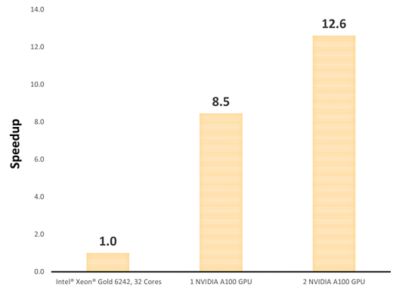

Likewise, on an aerospace external aerodynamics benchmark, we have seen that a single NVIDIA A100 GPU achieved more than 8.5 times greater performance than a cluster with 32 Intel® Xeon® Gold 6242 cores.

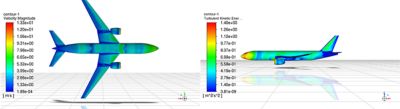

Figure 3: Aerospace aerodynamics on GPU solver

Figure 4: Speedup for different configurations of CPU clusters and GPU servers

1 NVIDIA A100 GPU ≈ 272 Intel® Xeon® Gold 6242 Cores
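The core-equivalence figures quoted for both benchmarks follow from multiplying the measured speedup by the size of the CPU baseline. The short Python sketch below reproduces that arithmetic. It assumes the CPU solver scales linearly with core count, which is a simplification, and the function name is hypothetical.

```python
def equivalent_cores(speedup: float, baseline_cores: int) -> float:
    """CPU cores needed to match one GPU, assuming ideal linear CPU scaling."""
    return speedup * baseline_cores


# Speedups and baseline core counts as quoted in the benchmarks above.
automotive = equivalent_cores(5.0, 80)  # A100 vs. 80 Xeon Platinum 8380 cores
aerospace = equivalent_cores(8.5, 32)   # A100 vs. 32 Xeon Gold 6242 cores

print(f"Automotive: 1 GPU matches about {automotive:.0f} cores")
print(f"Aerospace:  1 GPU matches about {aerospace:.0f} cores")
```

Because both speedups are quoted as "more than" a given factor, these values are best read as lower bounds. If CPU scaling is less than ideal at large core counts, more cores would be needed to match the GPU.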

How Can GPUs Reduce Hardware Costs?

During these benchmarks, we not only evaluated the performance of GPUs and CPUs, but we also considered other aspects that are important when making a hardware purchase decision. We looked at the hardware costs, comparing the cost of a GPU server with that of an HPC cluster with equivalent performance.

Figure 5: Hardware cost for a CPU cluster and equivalent GPU server

The study showed that, for a given target performance, engineers who run their CFD simulations on GPUs can see hardware costs up to 7x lower.

Up to 7x lower hardware purchase costs

This result is important, not only for companies that already leverage HPC to accelerate their simulation throughput, but also for companies that cannot afford the cost of an HPC cluster and are currently compromising on their time to market.

The ability to run on cheaper hardware with a better performance-to-price ratio will enable engineers to level up their simulation power while remaining within the total cost of ownership they have budgeted.

Companies can pay back their software investment in a few years, thanks to the hardware cost savings realized from the adoption of GPUs for CFD simulation.

How Can GPUs Reduce Power Consumption?

Another important aspect for companies making hardware purchase decisions is the power needed to maintain and operate an HPC cluster.

We looked at the power consumption of a CPU cluster with 1024 Intel® Xeon® Gold 6242 cores and noted a power consumption of 9600 W. When we looked at the power consumption of a 6 x NVIDIA® V100 GPU server providing the same performance, that power consumption was reduced to 2400 W.

The results from our benchmarks showed that companies that opt for a 6 x NVIDIA® V100 GPU server can reduce their power consumption by a factor of four compared to an equivalent HPC cluster.

Figure 6: Power consumption for a CPU cluster and an equivalent GPU server

4x lower power consumption
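To put the 4x figure in energy terms, the Python sketch below converts the two power draws quoted above into yearly energy use. The wattages come from the benchmark. The 24/7 utilization and the function names are assumptions made for illustration only.

```python
CPU_CLUSTER_WATTS = 9600.0  # 1024 Xeon Gold 6242 cores, as quoted above
GPU_SERVER_WATTS = 2400.0   # 6 x V100 GPU server, as quoted above
HOURS_PER_YEAR = 24 * 365


def power_ratio(cpu_watts: float, gpu_watts: float) -> float:
    """How many times more power the CPU cluster draws than the GPU server."""
    return cpu_watts / gpu_watts


def annual_energy_kwh(watts: float, utilization: float = 1.0) -> float:
    """Yearly energy in kWh; utilization=1.0 assumes the system runs 24/7."""
    return watts * HOURS_PER_YEAR * utilization / 1000.0


ratio = power_ratio(CPU_CLUSTER_WATTS, GPU_SERVER_WATTS)
saved_kwh = annual_energy_kwh(CPU_CLUSTER_WATTS) - annual_energy_kwh(GPU_SERVER_WATTS)

print(f"Power ratio: {ratio:.1f}x")
print(f"Energy saved per year at full load: {saved_kwh:.0f} kWh")
```

Multiplying the saved energy by a local electricity tariff gives a rough yearly cost saving. Actual savings depend on real utilization and on cooling overhead, which this sketch ignores.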

Unveiling the new Ansys Multi-GPU Solver in Fluent

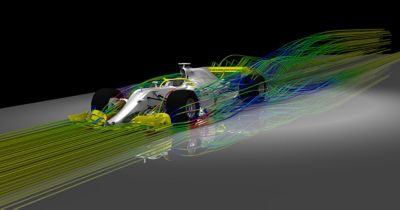

With Ansys 2022 R1, customers can leverage a new multi-GPU solver in Fluent on one or multiple GPUs for incompressible and compressible flows, and steady-state or transient simulations. This new solver has been architected from the ground up to run natively on GPUs and exploit their full potential. This is fundamentally different from GPU acceleration of isolated software modules provided by other simulation vendors.

This new solver supports all mesh types (e.g., poly, hex, tet, pyramid, prism, hanging node) and is available for all subsonic compressible flows with constant material properties. It also supports common turbulence models as well as solid conduction, conjugate heat transfer, moving walls, and porous media.

Figure 7: Formula 1 aerodynamics using the Ansys Fluent multi-GPU solver

Are you dealing with external aerodynamics, internal flow, or heat transfer simulations? If so, you may want to learn more about how you can benefit from GPU-enabled simulations today.

As you can guess, this is only the beginning of our journey for GPU-enabled CFD simulations. Ansys plans to extend support for additional capabilities and industrial applications in future releases, working in collaboration with our GPU hardware partners.

If you want to learn more about the Ansys Multi-GPU solver, check out our Ansys Fluent 2022 R1 release update, or reach out to your Ansys representative for additional information.