
ANSYS BLOG

May 9, 2023

Ansys-Onsemi Collaboration Leads to Greater Vehicle Perception Down the Road

Simulation enables accurate forecasting and validation of sensor functionality across driving environments.

It may be difficult to envision a world in which we give up control and let our vehicles do the driving, but we are already getting a glimpse of it. Many vehicles on the road today benefit from advanced driver assistance systems (ADAS) that use camera, radar, and lidar sensors to avoid collisions with obstacles, keep us in our driving lane, parallel park, and more.

All of these systems are guided by artificial intelligence (AI) sensing, such as computer vision, which is a core function of the perception stack in an autonomous vehicle (AV). An AV stack is made up of layers of components responsible for the sensing, connectivity, processing, analysis, and decision-making necessary for self-driving. Much like a human driver, a vehicle’s perception stack “looks around,” collecting data from the vehicle’s sensors and processing it to understand and respond to its immediate driving environment.

As two key players in the AV industry, Ansys and onsemi are advancing vehicle perception through a collaboration that combines their complementary technologies and gives original equipment manufacturers (OEMs) and Tier 1 suppliers an ecosystem for reaching their development and perception validation goals.

As a leader in power and sensing technologies, onsemi aims to foster innovation and develop intelligent technologies that solve complex customer challenges, including vehicle perception for AV development. Collaborating with Ansys enables onsemi to create system-level simulations that reveal the effect of its development decisions and help advance its technology. The onsemi team created a sensor model and a virtual twin, then used Ansys AVxcelerate simulation to integrate various driving scenarios, extending the sensor model into a broader system-level simulation.

“The value of onsemi’s sensor model is enhanced when we are able to forecast and authenticate the sensor's functionality across different situations. Ansys' simulation tool plays a crucial role in achieving this, thanks to its physics-based approach in generating scenes that offers the accuracy we require,” says Shaheen Amanullah, Director of Imaging Systems in onsemi’s Intelligent Sensing Group. “Using simulation, we establish a foundation that enables us to showcase, assess, and develop next-gen sensors that meet the evolving demands of our customers. Moreover, this platform will aid us in gauging the influence on both human and machine vision applications.”

Simulation Establishes a Real-World Correlation to Sensor Performance

The key benefit of this approach is the ability to establish a real-world correlation using a model whose performance is very close to that of the actual sensor. OEMs and Tier 1 suppliers can use this output to assess their own system designs with confidence. AVxcelerate Sensors provides physics-based, multispectral light propagation in dynamic scenarios, so onsemi’s sensor model can be tested and validated quickly and accurately without the need for physical prototypes.

AVxcelerate Sensors enables onsemi to perform comprehensive validation across various scenarios during the product definition and design phases. An OEM can take the onsemi model validated in AVxcelerate Sensors, test its perception algorithms against it, and immediately identify areas for improvement. Iterating on those findings leads to further sensor optimization, improving sensors that are still in the development pipeline. This activity also reveals the performance boundaries of the perception algorithms and provides a head start in training them.

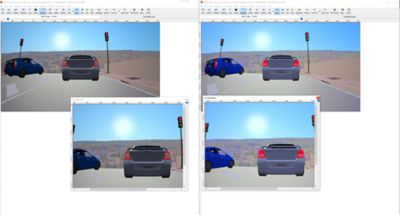

Joint Imager Demo Supports Automotive Camera Testing

From May 9–11, a joint imager demo will be showcased at onsemi’s booth at AutoSens Detroit 2023. For this demo, Ansys and onsemi used AVxcelerate Sensors to create a fundamental but pivotal test scenario for validating automotive camera performance in an ADAS/AV context. The onsemi imager model, fed with output from AVxcelerate Sensors, is simulated in this scenario to better understand how the sensor design affects an ADAS/AV system. All the simulation output is then cross-checked against video recorded by the physical sensor.

The Hyperlux sensor family (right) retains flickering lights without the artifacts observed on the previous generation of sensors (left).

To provide reliable solutions to its customers, the onsemi team aims to achieve a high degree of correlation between actual and simulated sensors in both image quality and sensor behavior. Key performance indicators (KPIs) are used to quantify the accuracy of the resulting simulation data. In this scenario, scene generation is critical, because the accuracy of the generated scenes largely determines how closely the simulation can correlate with the actual sensor.
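The post does not name the KPIs onsemi uses. Purely as a hypothetical illustration, the sketch below computes two generic image-agreement metrics, peak signal-to-noise ratio (PSNR) and the Pearson correlation of pixel intensities, between a simulated frame and a recorded frame of the same scene. The function names, the 8-bit value range (`max_value=255`), and the assumption that the two frames are already spatially aligned grayscale images are ours, not onsemi's or Ansys's.

```python
import math
from typing import Sequence

# Hypothetical frame type: a 2-D grayscale image stored as rows of pixel values.
Frame = Sequence[Sequence[float]]


def _flatten(frame: Frame) -> list[float]:
    """Flatten a 2-D frame into a 1-D list of pixel values."""
    return [float(p) for row in frame for p in row]


def psnr(simulated: Frame, recorded: Frame, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer agreement."""
    sim, rec = _flatten(simulated), _flatten(recorded)
    if len(sim) != len(rec):
        raise ValueError("frames must have the same number of pixels")
    mse = sum((s - r) ** 2 for s, r in zip(sim, rec)) / len(sim)
    if mse == 0:
        return math.inf  # identical frames: no error at all
    return 10.0 * math.log10(max_value ** 2 / mse)


def pearson(simulated: Frame, recorded: Frame) -> float:
    """Pearson correlation of pixel intensities, in the range [-1, 1]."""
    sim, rec = _flatten(simulated), _flatten(recorded)
    if len(sim) != len(rec):
        raise ValueError("frames must have the same number of pixels")
    n = len(sim)
    mean_s, mean_r = sum(sim) / n, sum(rec) / n
    cov = sum((s - mean_s) * (r - mean_r) for s, r in zip(sim, rec))
    var_s = sum((s - mean_s) ** 2 for s in sim)
    var_r = sum((r - mean_r) ** 2 for r in rec)
    if var_s == 0 or var_r == 0:
        raise ValueError("correlation is undefined for a constant frame")
    return cov / math.sqrt(var_s * var_r)


if __name__ == "__main__":
    # Toy 2x3 frames standing in for a recorded and a simulated image.
    recorded = [[10, 20, 30], [40, 50, 60]]
    simulated = [[12, 19, 31], [41, 48, 61]]
    print(f"PSNR: {psnr(simulated, recorded):.2f} dB")
    print(f"Pearson r: {pearson(simulated, recorded):.4f}")
```

PSNR penalizes absolute intensity errors, while Pearson correlation ignores uniform gain and offset differences, so a real correlation study would likely track several complementary KPIs rather than a single score.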

Ansys and onsemi worked to reproduce the benchmark scenes as accurately as possible, a concerted effort that enabled both companies to improve their tools and better align on critical requirements. This use of virtual simulation will also help OEMs and Tier 1s engage with sensor manufacturers at an earlier stage, facilitating the definition of next-generation sensors that, based on simulation-backed data, better meet their requirements. Additionally, OEMs and Tier 1s can apply their perception stacks to the simulation output and train their networks much earlier in the process than current practices allow.

“Presently, such activities take place after the sensor has been sampled, leaving a small time-to-market window that limits achievable outcomes,” says Amanullah. “By gaining a head start, OEMs and Tier 1s will have more time to optimize their algorithms and train their networks, resulting in a shorter and smoother validation phase following the sampling process. Our intention is to enhance the current simulation methods and integrate additional features in the future, using AVxcelerate Sensors to provide a more accurate representation of a physical sensor in diverse scenes for our customers.”