
Ansys continues to support the growth of today's students by providing simulation engineering software to them free of charge.


ANSYS BLOG

October 2, 2023

Teledyne FLIR Thermal Cameras Now Operational in Ansys AVxcelerate Sensors Suite

Safety and security are critical to introducing autonomous vehicles to society. Any measure that raises the level of safety is a welcome addition and another step forward for autonomy. The introduction of thermal cameras in the automotive industry could be a game changer, because they have the potential to transform the way autonomous vehicles perceive their surroundings. Essentially, thermal cameras fill gaps in perception and add redundancy to cameras, radar, and lidar.

Perception and fusion software play a vital role in the safety of autonomous vehicles by helping to interpret the surroundings and detect objects. With the Ansys 2023 R2 release, the AVxcelerate Sensors suite now enables perception engineers to simulate various Teledyne FLIR thermal camera models. By combining Ansys simulation with Teledyne FLIR thermal camera models, engineers can quickly reach a much higher level of safety, leading to enhanced perception in future autonomous vehicles and advanced driver-assistance systems (ADAS).

Leveraging the thermal properties of the environment and its assets, the camera simulation pipeline now provides longwave infrared (LWIR) raw data. AVxcelerate simulates the thermal imager's output, including the point spread function (PSF), motion blur, and temperature, which allows photon information to be converted into a temperature map. This conversion uses the same Teledyne FLIR algorithm that runs in the actual camera hardware.
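To make the temperature, PSF, and temperature-map steps more concrete, here is a heavily simplified sketch. It is not the Ansys or Teledyne FLIR implementation, and every name in it is hypothetical. It assumes blackbody surfaces (emissivity 1), an 8 to 14 µm LWIR band, and a small Gaussian-like kernel as a stand-in PSF; motion blur is omitted. Each pixel's temperature is converted to band-integrated radiance with Planck's law, the radiance image is blurred by the PSF, and the blurred radiance is inverted back to an apparent temperature by bisection.

```python
import math

# Physical constants (SI units)
H = 6.62607015e-34    # Planck constant, J*s
C = 2.99792458e8      # speed of light, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K

def spectral_radiance(wavelength_m, temp_k):
    """Planck's law: blackbody spectral radiance, W / (m^2 * sr * m)."""
    return (2.0 * H * C**2 / wavelength_m**5) / math.expm1(H * C / (wavelength_m * K_B * temp_k))

def band_radiance(temp_k, lo_um=8.0, hi_um=14.0, steps=200):
    """Integrate Planck's law over an assumed 8-14 um LWIR band (midpoint rule)."""
    lo, hi = lo_um * 1e-6, hi_um * 1e-6
    dl = (hi - lo) / steps
    return sum(spectral_radiance(lo + (k + 0.5) * dl, temp_k) for k in range(steps)) * dl

def radiance_to_temp(radiance, t_lo=200.0, t_hi=400.0, iters=50):
    """Invert band_radiance by bisection; band radiance rises monotonically with temperature."""
    for _ in range(iters):
        mid = 0.5 * (t_lo + t_hi)
        if band_radiance(mid) < radiance:
            t_lo = mid
        else:
            t_hi = mid
    return 0.5 * (t_lo + t_hi)

# Hypothetical 3x3 Gaussian-like PSF; weights sum to 1 so a uniform scene stays uniform
PSF = [[1 / 16, 2 / 16, 1 / 16],
       [2 / 16, 4 / 16, 2 / 16],
       [1 / 16, 2 / 16, 1 / 16]]

def apply_psf(image, kernel=PSF):
    """Filter a 2D list with a symmetric kernel, replicating edge pixels at the borders."""
    rows, cols = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = 0.0
            for di in range(-half, half + 1):
                for dj in range(-half, half + 1):
                    ii = min(max(i + di, 0), rows - 1)
                    jj = min(max(j + dj, 0), cols - 1)
                    acc += kernel[di + half][dj + half] * image[ii][jj]
            out[i][j] = acc
    return out

def simulate_thermal_frame(temp_map_k):
    """Temperature map (K) -> LWIR radiance -> PSF blur -> apparent temperature map (K)."""
    radiance = [[band_radiance(t) for t in row] for row in temp_map_k]
    blurred = apply_psf(radiance)
    return [[radiance_to_temp(r) for r in row] for row in blurred]
```

The blur is applied to radiance rather than to temperature because optics spread photons, not temperatures; inverting afterwards gives the apparent temperature each pixel would report, so a small hot object reads cooler than it really is.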

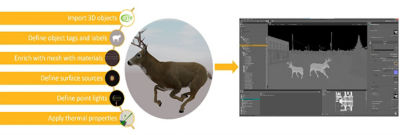

Figure 1. Enrich a 3D world with thermal properties to run accurate physics-based simulations

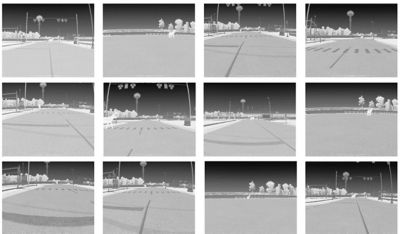

Thanks to the collaboration with Teledyne FLIR, AVxcelerate Sensors users can now access automotive thermal cameras, including FLIR image signal processing to convert temperatures into thermal images that perception algorithms can process. Developers can now simulate a set of FLIR thermal cameras. They can calibrate virtual thermal cameras with the same parameters as the actual camera using the AVxcelerate Thermal Camera Editor and integrate them into their sensor layouts. Thermal camera output data consists of one grayscale image illustrating the temperature relationship between the objects in the scene. Optionally, users can also generate a temperature map, particularly suited for debugging purposes.

Figure 2. Virtual dataset of Teledyne FLIR thermal camera images generated by Ansys AVxcelerate Sensor model
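The step from a temperature map to a grayscale image can be illustrated with the simplest possible mapping, a linear stretch between the coldest and hottest temperatures. This is only a stand-in: it is not FLIR's image signal processing, the function name is hypothetical, and the "white-hot" convention (hotter is brighter) is an assumption.

```python
def temps_to_grayscale(temp_map_k, t_min=None, t_max=None):
    """Map a 2D temperature map (K) to 8-bit gray levels with a linear stretch.

    Assumed "white-hot" convention: hotter pixels are brighter. t_min/t_max
    default to the scene's own extremes; temperatures outside them are clipped.
    """
    flat = [t for row in temp_map_k for t in row]
    lo = min(flat) if t_min is None else t_min
    hi = max(flat) if t_max is None else t_max
    span = hi - lo
    gray = []
    for row in temp_map_k:
        out_row = []
        for t in row:
            if span <= 0:
                out_row.append(0)  # flat scene: no contrast to display
            else:
                frac = min(max((t - lo) / span, 0.0), 1.0)
                out_row.append(round(frac * 255))
        gray.append(out_row)
    return gray

# Hypothetical 2x3 temperature map in kelvin
image = temps_to_grayscale([[290.0, 300.0, 310.0],
                            [295.0, 305.0, 290.0]])
```

With a plain min/max stretch, one very hot object compresses the contrast of everything else in the frame, which is why thermal imaging pipelines commonly use more elaborate automatic gain control.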

What Are Thermal Cameras?

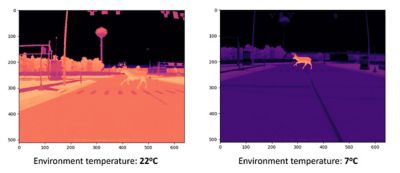

Thermal cameras are specialized imaging devices that detect the infrared radiation emitted or reflected by all objects. They receive and interpret the infrared radiation and output a map correlated to the intensity of the infrared radiation of the scene. This unique methodology enables the cameras to create a differentiated map of the scene segmented by the heat that does not rely on light. This is exceptionally beneficial in low-light, darkness, and poor-visibility conditions — such as adverse or foggy weather. Furthermore, thermal cameras can detect pedestrians and living animals on the road at night at distances multiple times farther than headlights illuminate.

Figure 3. Impact of environmental temperature on living animal detection
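Because a thermal camera sees both emitted and reflected radiation, the contrast between a target and its background depends on the surrounding temperature. The sketch below illustrates this with the Stefan-Boltzmann law for opaque gray bodies (reflectivity = 1 - emissivity). It uses total exitance over all wavelengths rather than a camera's band, and every temperature and emissivity value is hypothetical.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 * K^4)

def apparent_exitance(t_surface_k, t_surroundings_k, emissivity):
    """Emitted plus reflected radiant exitance of an opaque gray body, W/m^2.

    Assumes reflectivity = 1 - emissivity and surroundings at one uniform temperature.
    """
    emitted = emissivity * SIGMA * t_surface_k ** 4
    reflected = (1.0 - emissivity) * SIGMA * t_surroundings_k ** 4
    return emitted + reflected

def target_contrast(t_target_k, t_background_k, t_surroundings_k,
                    e_target=0.98, e_background=0.95):
    """Difference in apparent exitance between a target and its background, W/m^2."""
    return (apparent_exitance(t_target_k, t_surroundings_k, e_target)
            - apparent_exitance(t_background_k, t_surroundings_k, e_background))

# Hypothetical scenarios: an animal's coat at 305 K seen against the road surface
cold_night = target_contrast(305.0, 273.0, 273.0)  # road at ambient on a freezing night
hot_day = target_contrast(305.0, 318.0, 303.0)     # sun-warmed road, warm ambient air
```

In the cold-night case the animal stands out strongly; in the hot-day case the contrast is smaller and changes sign, so the animal appears cooler than the road.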

Comparison of Sensors

Traditional cameras rely on visible light to capture high-resolution images with color and texture. They offer high resolution and accuracy but perform well only in well-illuminated conditions, for example in clear, sunny weather or under streetlights.

Thermal cameras, on the other hand, operate in the far-infrared (long-wave infrared) spectrum, which lies outside the range of visible light, so detection does not depend on illumination from the sun or streetlights. This lets them capture heat signatures and visualize objects based on their thermal emission or reflection, regardless of ambient light conditions. They can detect objects even in complete darkness, in glare, or in poor-visibility conditions such as fog or snow.
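Wien's displacement law, λ_max = b / T, gives a quick sense of why this band is used: objects near ambient temperature radiate most strongly around 10 µm, while the sun's emission peaks in the visible range. This is a simplification, since the sun also heats surfaces and so changes their thermal emission. The function name below is hypothetical.

```python
WIEN_B = 2.897771955e-3  # Wien's displacement constant, m*K

def peak_wavelength_um(temp_k):
    """Wavelength (micrometers) where blackbody spectral radiance peaks: lambda_max = b / T."""
    if temp_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_B / temp_k * 1e6

for label, t in [("road or pedestrian (~300 K)", 300.0), ("sun's surface (~5772 K)", 5772.0)]:
    print(f"{label}: peak near {peak_wavelength_um(t):.2f} um")
```

For 300 K the peak is near 9.7 µm, inside the long-wave infrared band, while for the sun it is about 0.5 µm, in visible green light.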

Lidar is an active sensor that illuminates the surrounding environment with laser beams. By measuring the time of flight of the reflected light, it builds 3D maps of objects, which facilitates object detection and distance measurement. While lidar works in low-light conditions, its performance degrades in adverse weather such as fog, because water droplets scatter the laser light, and it can also be sensitive to highly reflective surfaces.
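The time-of-flight principle reduces to one formula: range = c · Δt / 2, because the pulse travels to the target and back. The sketch below applies it and turns each return into a 3D point. The function names, the sensor frame (x forward, y left, z up), and the sample returns are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact, SI definition)

def tof_range_m(round_trip_s):
    """Range from a pulse's round-trip time; the light travels out and back, hence / 2."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def return_to_point(round_trip_s, azimuth_rad, elevation_rad):
    """One lidar return plus its beam angles -> (x, y, z) in an assumed sensor frame.

    Assumed frame: x forward, y left, z up; azimuth measured from x toward y.
    """
    r = tof_range_m(round_trip_s)
    x = r * math.cos(elevation_rad) * math.cos(azimuth_rad)
    y = r * math.cos(elevation_rad) * math.sin(azimuth_rad)
    z = r * math.sin(elevation_rad)
    return (x, y, z)

# Hypothetical returns: (round-trip time in s, azimuth, elevation)
returns = [(200e-9, 0.0, 0.0), (400e-9, math.radians(10), math.radians(-2))]
cloud = [return_to_point(*ret) for ret in returns]
```

A 200 ns round trip corresponds to roughly 30 m; collecting many such points across beam angles yields the 3D map described above.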

Radar sensors use radio waves to detect objects and are less affected by inclement weather. Radar is affordable and valuable because it can provide the distance to an object and its rough size, but its low resolution is often a limitation: it cannot reliably differentiate between objects with similar properties, such as a person and a mailbox.
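A rough rule of thumb shows where radar's resolution limit comes from: an antenna of aperture D resolves angles of about θ ≈ λ / D, so at range R one resolution cell is about R · θ wide. The sketch assumes a 77 GHz automotive radar with a hypothetical 10 cm aperture; real sensors refine this with array processing, so treat the numbers as order-of-magnitude only.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def angular_resolution_rad(freq_hz, aperture_m):
    """Rule-of-thumb angular resolution of an antenna aperture: theta ~ wavelength / D."""
    wavelength_m = SPEED_OF_LIGHT / freq_hz
    return wavelength_m / aperture_m

def cross_range_cell_m(range_m, freq_hz, aperture_m):
    """Approximate lateral width of one resolution cell at a given range (small-angle)."""
    return range_m * angular_resolution_rad(freq_hz, aperture_m)

# Hypothetical 77 GHz radar with a 10 cm aperture, target 50 m ahead
theta = angular_resolution_rad(77e9, 0.10)
cell = cross_range_cell_m(50.0, 77e9, 0.10)
print(f"angular resolution ~ {math.degrees(theta):.1f} deg, cell width at 50 m ~ {cell:.1f} m")
```

Under these assumptions the cell is about 1.9 m wide at 50 m, so a person and a nearby mailbox can fall into the same cell and look alike.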

Importance of Physics-Based Sensors in Autonomous Vehicles

All sensor modalities have strengths and weaknesses, which is why a combination of sensors is the preferred way to achieve the required level of perception. AVxcelerate physics-based sensors offer high-fidelity outputs, are based on the actual spectral properties of light propagation from the visible to the long-wave infrared, and provide the real-world performance needed to achieve the best possible perception and, ultimately, greater safety for all.
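One generic way to see why combining sensors helps is inverse-variance weighting of independent estimates of the same quantity, equivalent to a one-step scalar Kalman update. This is a textbook technique, not AVxcelerate's fusion method; it assumes unbiased, independent errors, and the readings below are hypothetical.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of independent, unbiased estimates.

    estimates: list of (value, variance) pairs, e.g. one range reading per sensor.
    Returns (fused_value, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Hypothetical range readings of one object: (meters, variance in m^2)
readings = [(25.4, 0.25),  # sensor A
            (26.1, 1.00),  # sensor B
            (24.8, 4.00)]  # sensor C
fused_range, fused_var = fuse(readings)
```

The fused variance is always smaller than that of the best single sensor, which is the quantitative version of the redundancy argument: each modality adds information even when it is individually weaker.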