ANSYS BLOG

November 11, 2022

How Simulation Drives the Top Automotive Trends: Autonomous Vehicles

We’re still many years away from ordering up an autonomous vehicle to drive us around town, and most of us aren’t in a hurry to relinquish the steering wheel. In a recent survey by the American Automobile Association (AAA), a majority of drivers favored improvements to the driver assistance systems already in place over the development of fully autonomous experiences. In fact, 85% said they were fearful of or unsure about self-driving technology.[1]

However, original equipment manufacturers (OEMs) continue to advance autonomous vehicle (AV) technologies. Their aim is both to extend the benefits of today’s advanced driver assistance systems (ADAS) and to capture the benefits that greater autonomy will bring further down the road. Because they do not depend on driver input, AVs offer transformative safety opportunities for all vehicle occupants and for the pedestrians they encounter. They also have the potential to make transportation more accessible and equitable for everyone and to improve air quality. Through thoughtful urban planning, they could also reduce traffic congestion and create greener spaces.

Achieving Vehicle Autonomy is a Safer Bet with Simulation

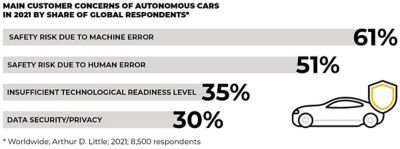

Of course, the biggest assumption around autonomous driving is that taking the human factor out from behind the wheel removes the source of most traffic accidents. While this is true to an extent, safety also depends on the driverless technology itself functioning as it should. Ensuring the technological safety of these autonomous systems requires extensive testing and validation through simulation.

“Essentially all the human factors you can think of that negatively influence driving would be reduced by the adoption of autonomous driving,” says Gilles Gallee, business developer and subject matter expert on autonomous vehicles at Ansys. “So, to put these risk factors behind us, we must be able to confidently state that autonomous driving is safer than a human driver before we can fully embrace self-driving vehicles. As we advance through various levels of autonomy, the key question for original equipment manufacturers is how to make the technology safer, and how to demonstrate that an AV is, in fact, safer than the human driver to the appropriate authorities.”

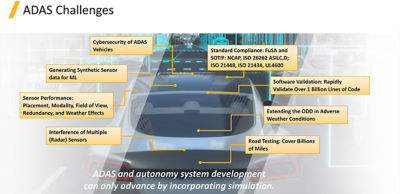

In the case of autonomous technology, OEMs cannot demonstrate safety performance through physical testing alone. The methodologies needed to gain approvals are all based on International Organization for Standardization (ISO) standards. These methodologies are the body of rules, methods, and procedures OEMs must follow. The relevant ISO standard matters most here because it describes the methodology needed to make an adequate demonstration of safety.

This means OEMs must use safety of the intended functionality (SOTIF) scenarios, tested according to a defined methodology, to show that their systems are safe. SOTIF (ISO/PAS 21448) was developed specifically to address the safety challenges that autonomous and semi-autonomous vehicles pose for vehicle software developers. Short of an infeasible amount of physical testing, simulation is the only cost-effective and time-efficient way to demonstrate AV system safety.
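Why is physical testing alone infeasible? A standard statistical argument makes the point. This is the classic zero-failure demonstration calculation, not a SOTIF requirement or an Ansys method.

Assume failures arrive as a Poisson process. A vehicle must then drive n miles with no failures before we can claim, with confidence C, that its failure rate is below a target r. That requires n ≥ −ln(1 − C)/r.

The benchmark below is an illustrative assumption, not a figure from this article: one fatal crash per 100 million miles.

```python
import math


def miles_for_zero_failure_demo(target_rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to claim, at the given confidence, that the
    true failure rate is below target_rate_per_mile.

    Assumes failures follow a Poisson process, so the chance of seeing no
    failures in n miles at rate r is exp(-r * n). Solving
    exp(-r * n) <= 1 - confidence for n gives n >= -ln(1 - confidence) / r.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    if target_rate_per_mile <= 0.0:
        raise ValueError("target_rate_per_mile must be positive")
    return -math.log(1.0 - confidence) / target_rate_per_mile


# Illustrative assumption (not from the article): a benchmark of
# one fatal crash per 100 million miles.
BENCHMARK_RATE = 1.0 / 100_000_000

miles = miles_for_zero_failure_demo(BENCHMARK_RATE, confidence=0.95)
print(f"{miles / 1e6:.0f} million failure-free miles")
```

The required distance scales with 1/r, so halving the benchmark rate doubles the mileage. Any failure observed during testing raises the requirement further.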

“Functional safety is about mitigating risks due to system failures like software, hardware, and sensor failures,” says Gallee. “In addition to functional safety, SOTIF focuses on proper situational awareness in order to be safe.”

The reference point for functional safety in the Ansys ecosystem is Ansys medini analyze safety analysis software. Medini analyze delivers the model-based systematic methodology and approach necessary to support successful safety analysis in this domain.

To overcome the huge effort required for validation, Ansys also provides a simulation toolchain that runs alongside medini analyze. This toolchain combines simulation at scale with statistics and scenario analysis. It includes two key tools:

- Ansys AVxcelerate Sensors enables virtual sensor simulation to test sensor perception and behavior in real-world scenarios.
- Ansys optiSLang provides efficient reliability analysis and scenario-based validation.

These capabilities have already been proven in practice. In December 2021, Gallee’s team proposed that Mercedes use optiSLang in its successful demonstration of safety to German authorities. That work helped make the 2022 Mercedes-Benz S-Class the first L3 autonomous vehicle on the market worldwide.
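The idea of combining "simulation at scale with statistics" can be illustrated with plain Monte Carlo sampling. The sketch below is not the optiSLang API or its workflow. It samples a hypothetical emergency-braking scenario, with four inputs drawn from invented distributions:

- vehicle speed
- the gap at which an obstacle appears
- perception latency
- achievable deceleration

For each sample, it checks whether the vehicle can stop in time. It then reports the estimated failure probability with an approximate 95% confidence interval.

```python
import math
import random


def stopping_distance_m(speed_mps: float, latency_s: float, decel_mps2: float) -> float:
    # Distance covered during the perception/actuation latency plus braking distance.
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)


def estimate_collision_probability(n_samples: int, seed: int = 0) -> tuple[float, float]:
    """Plain Monte Carlo over randomly sampled, hypothetical braking scenarios.

    Returns (estimated failure probability, approximate 95% half-width).
    All parameter distributions below are invented for illustration.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    failures = 0
    for _ in range(n_samples):
        speed = rng.uniform(10.0, 17.0)           # m/s, roughly 36-60 km/h
        gap = rng.uniform(15.0, 40.0)             # m, distance when the obstacle appears
        latency = max(0.0, rng.gauss(0.3, 0.1))   # s, perception + actuation delay
        decel = rng.uniform(6.0, 9.0)             # m/s^2, achievable braking
        if stopping_distance_m(speed, latency, decel) > gap:
            failures += 1
    p = failures / n_samples
    # Normal approximation to the binomial confidence interval.
    half_width = 1.96 * math.sqrt(p * (1.0 - p) / n_samples)
    return p, half_width


p, hw = estimate_collision_probability(100_000, seed=42)
print(f"estimated collision probability: {p:.4f} +/- {hw:.4f}")
```

Plain Monte Carlo needs very many samples when failures are rare. That is why dedicated reliability tools typically rely on more sample-efficient methods, such as adaptive or importance sampling.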

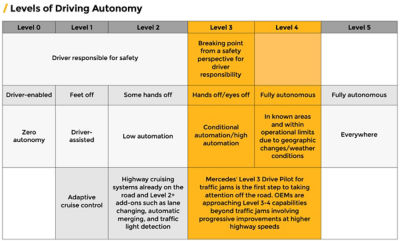

The columns highlighted in yellow indicate how far original equipment manufacturers have progressed toward full autonomy. They are currently developing solutions somewhere between Levels 3 and 4, but they will continue to make big plays at Levels 2 and 3. At Level 3, the onus for safety begins to shift from the driver to the OEM.

In terms of priorities, safety is undoubtedly the most important topic on the minds of OEMs. This is especially true for the first batch of L3 autonomous vehicles, which represent a significant technological shift for the automotive industry. L3 is a major milestone because it is the first automated function for which OEMs agree to assume full responsibility for vehicle operation. OEMs could also be held liable for an accident that occurs while the system is fully engaged. This marks a significant shift in the responsibilities of automotive manufacturers.
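The L0 to L5 levels discussed here follow the SAE J3016 taxonomy of driving automation. The function below is a deliberately simplified sketch of the responsibility shift described above. It is our own encoding, not an official SAE or Ansys artifact, and the enum names are abbreviated from the standard.

- Through Level 2, the human drives and supervises at all times.
- From Level 3 upward, the automated system performs the driving task whenever the feature is engaged.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """Driving automation levels, names abbreviated from SAE J3016."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def who_drives(level: SaeLevel, feature_engaged: bool) -> str:
    """Simplified: who performs and supervises the driving task at a given moment."""
    if not feature_engaged or level <= SaeLevel.PARTIAL_AUTOMATION:
        # Levels 0-2 only assist; the human drives and supervises at all times.
        return "human driver"
    if level == SaeLevel.CONDITIONAL_AUTOMATION:
        # Level 3: the system drives, but a human must take over when it requests.
        return "automated system, human on standby"
    # Levels 4-5: the system drives and handles its own fallback.
    return "automated system"


for lvl in SaeLevel:
    print(f"L{lvl.value} {lvl.name}: {who_drives(lvl, feature_engaged=True)}")
```

Actual responsibility and liability also depend on regulation and on each feature's operational design domain. This sketch ignores both.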

“Mercedes’ successful foray into L3 autonomy is really a blueprint for the market,” Gallee says. “Similarly, we have also partnered with BMW to implement a toolchain that will bring the ability to demonstrate autonomous vehicle safety ahead of the actual safety analysis. In the near future, other OEMs will undoubtedly follow the methodologies behind these achievements, especially those involving simulation.”

Sensing the Not-So-Obvious with Behavior Prediction and Object Detection

Two especially big plays in autonomy are behavior prediction and 3D object detection. Both rely on sensors and perception software. These features aim to reduce accidents in two ways: by detecting the behavior of pedestrians around a moving vehicle, and by detecting driver fatigue or negligence inside the vehicle. To implement these features successfully, advanced recognition technologies enhanced by artificial intelligence (AI) are almost a necessity.

Today, when we refer to L3 autonomy, we’re essentially pointing to autonomous vehicle engagement at a maximum speed of approximately 60 km/h (about 37 mph). Higher speeds inherently increase risk. This raises the accuracy requirements for sensing and puts greater emphasis on predictive technologies, such as detecting pedestrians, animals, and other obstacles.
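The link between speed and sensing requirements follows from basic kinematics. After detecting an obstacle, a vehicle covers a reaction distance (speed × latency) and then a braking distance (v²/2a) before it stops. The braking term therefore grows with the square of speed.

The sketch below computes that minimum detection range. The 0.5 s latency and 6 m/s² deceleration defaults are illustrative assumptions, not values from this article or from any regulation.

```python
def required_detection_range_m(speed_kmh: float,
                               latency_s: float = 0.5,
                               decel_mps2: float = 6.0) -> float:
    """Minimum distance at which an obstacle must be detected to stop before it.

    Reaction distance (v * latency) plus braking distance v^2 / (2a).
    Default latency and deceleration are illustrative assumptions.
    """
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * latency_s + v * v / (2.0 * decel_mps2)


for kmh in (60, 90, 130):
    print(f"{kmh:>3} km/h -> {required_detection_range_m(kmh):5.1f} m")
```

Under these assumptions, the required range roughly quadruples between 60 km/h and 130 km/h. This is one reason higher-speed automation demands sensors that are accurate at much longer distances.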

“While the application of L3 autonomy is a milestone for the industry, it is still quite limited compared to future advances. One reason why it’s limited is because it’s linked to sensing technology,” says Gallee. “Sensors today are not accurate enough or reliable enough to accommodate advanced prediction, so the next generation technology for them involves more optimized performance at much higher speeds.”

To support this effort, OEMs and automotive suppliers are working to improve the overall accuracy of sensing technology. Regulatory authorities are also anticipating these developments as they prepare for driver monitoring systems. This puts considerable pressure on sensing technology, AI-based machine learning, and camera development. It remains to be seen how much accuracy will be needed, and what level of sensor performance it will take to achieve it.

To ensure the safety and long-term reliability of AVs, OEMs will need to evaluate sensing technology at an early stage. This will ultimately require more sensor and scenario simulation to advance their testing and development. Ansys’ unique ability to simulate all sensor modalities, including thermal cameras, will play an important role in meeting these objectives.

Reaching the ‘No-Tipping’ Point with Driverless Delivery

Looking to the future, the first step in bringing fully autonomous technology to public roadways will be on-demand mobility services. These include “robotaxi”-style delivery services, also referred to as last-mile delivery. Typically, this application involves delivering goods and services from nearby stores via a driverless fleet. The fleet operates at L4 or L5 autonomy in urban settings, through a ride-sharing company. Delivery would be enabled by a combination of advanced AI algorithms and hyper-speed teleoperation, which provides human assistance in certain situations.

“The challenge here comes with the ability and the reliability of mapping and satellite coverage in urban areas, which leads to inaccurate positioning on the road mostly due to buildings and other points of interference,” says Gallee. “A lot of customer discussions we have center on this topic, and what our long-term digital mission should be to help them.”

To advance this mission, a few questions remain to be answered:

- How can we ensure this mission is successful in more complex road environments?

- What happens when the delivery vehicle has to go through a tunnel or into an underground parking facility with no antenna, no GPS, or no satellite to guide it?
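Positioning gaps like the ones described above are commonly handled with sensor fusion. The one-dimensional Kalman filter below is a minimal sketch of that generic technique, not an Ansys or EasyMile implementation. It dead-reckons with wheel odometry and corrects its estimate with GNSS fixes whenever they are available. The step size, noise variances, and tunnel location are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class PositionEstimate:
    position_m: float   # estimated position along the road
    variance_m2: float  # uncertainty of that estimate


def predict(est: PositionEstimate, odometry_step_m: float, odometry_var_m2: float) -> PositionEstimate:
    # Dead reckoning: advance by the odometry reading; uncertainty accumulates.
    return PositionEstimate(est.position_m + odometry_step_m,
                            est.variance_m2 + odometry_var_m2)


def update(est: PositionEstimate, gnss_position_m: float, gnss_var_m2: float) -> PositionEstimate:
    # Scalar Kalman update: blend prediction and GNSS fix, weighted by their variances.
    gain = est.variance_m2 / (est.variance_m2 + gnss_var_m2)
    return PositionEstimate(est.position_m + gain * (gnss_position_m - est.position_m),
                            (1.0 - gain) * est.variance_m2)


def run(steps: int, tunnel: range) -> list[float]:
    """Return the position standard deviation after each step; no GNSS inside `tunnel`."""
    est = PositionEstimate(position_m=0.0, variance_m2=1.0)
    sigmas = []
    for k in range(steps):
        est = predict(est, odometry_step_m=10.0, odometry_var_m2=0.25)
        if k not in tunnel:
            # Hypothetical fix: GNSS reports the true position (10 m per step).
            est = update(est, gnss_position_m=10.0 * (k + 1), gnss_var_m2=4.0)
        sigmas.append(est.variance_m2 ** 0.5)
    return sigmas


sigmas = run(steps=50, tunnel=range(20, 40))
print(f"before tunnel: {sigmas[19]:.2f} m, at tunnel exit: {sigmas[39]:.2f} m, "
      f"after reacquiring GNSS: {sigmas[49]:.2f} m")
```

Without satellite fixes, the uncertainty grows at every step the vehicle spends in the tunnel. It shrinks again once GNSS returns. This is why such scenarios need to be exercised extensively in simulation.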

This level of complexity will require far more extensive simulation, along with the related AI functions. EasyMile, a provider of driverless goods and mobility solutions, is already operating on this premise, enabled by Ansys simulation software.

Paving the Way for Greater Autonomy with Ansys

Accelerating autonomous driving requires intelligence gathering on multiple fronts, including radar, lidar, and other sensor technologies, as well as AI-enhanced software. Seamless communication across wide-open digital environments is also a requirement. Today, OEMs and other mobility solutions providers are turning to Ansys simulation software to level up their driverless technologies and achieve safety compliance faster. Ansys provides a well-rounded solution stack to aid these initiatives.

- Ansys AVxcelerate Sensors readily integrates the simulation of ground-truth sensors including camera, radar, thermal camera and lidar to virtually assess complex ADAS systems and autonomous vehicles.

- Ansys HFSS is a 3D electromagnetic (EM) simulation software for designing and simulating high-frequency electronic products such as antennas, antenna arrays, RF or microwave components, high-speed interconnects, filters, connectors, IC packages, and printed circuit boards.

- Ansys medini analyze is an end-to-end model-based safety and cybersecurity solution to accelerate autonomous system development and certification.

- Ansys optiSLang process integration and design optimization software automates key aspects of the robust design optimization process.

- Ansys SCADE, a model-based software engineering environment for embedded software design and verification, is critical to electrified powertrain integration, battery management system, and vehicle electrical system applications.

- Ansys Speos optical design software features capabilities for comprehensive autonomous vehicle sensor simulation that includes lidar, radar, and camera design and development.

These products are just a sampling of our broader portfolio geared toward autonomous vehicle development.

Stay tuned. Our next blog post will cover vehicle connectivity, artificial intelligence, and additive manufacturing. We’ll discuss the importance of simulation technology on each of these industry fronts, including the related Ansys tools.

References

1. “AAA Survey Reaffirms Public Skepticism Over Self-Driving Tech,” IoT World Today, May 20, 2022.