ANSYS BLOG

February 24, 2022

How Do Self-Driving Cars “See” in Snow?

To navigate safely, autonomous cars first have to “see” the world around them. Through an integrated system of camera, lidar, and radar sensors, self-driving vehicles are constantly scanning the environment to make informed decisions. But what happens when autonomous sensors are obstructed by snow?

While rain and fog are also challenging weather conditions for self-driving vehicles, the random pattern of snowfall, the properties of each flake, and the varying distances between flakes make snow particularly difficult to maneuver in. If sticky, cold, chaotic snow gets between the sensors and an obstacle, the car’s ability to react appropriately can be greatly compromised.

According to the U.S. Federal Highway Administration, nearly 70% of the U.S. population lives in snowy regions. While California has ideal weather for autonomous vehicles, the complications of driving in snow have to be overcome before self-driving cars can be relevant to the majority of Americans.

![A snowflake generated with 3D texture in Ansys Speos to analyze its optical properties.](https://images.ansys.com/is/image/ansys/ansys-speos-snow-conditions)

A snowflake generated with 3D texture in Ansys Speos to analyze its optical properties.

Why Is Snow So Difficult for Autonomous Sensors?

Snow can cause a confidence slip in the perception algorithm as it tries to process the information from the sensor’s signal. The result can be a failure to detect approaching objects or a false detection of objects that aren’t actually there.

Challenges for autonomous sensors in the snow include:

- The lack of contrast between snow and other objects causes objects to go undetected

- Snow scattering can cause object location, distance, or angle to be wrongly identified

- Input from multiple sensors must agree on what they “see,” but snow presents different issues for each sensor type, which jeopardizes consensus
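To make the consensus problem concrete, here is a minimal sketch of one simple way to check whether sensors agree: require that at least two distinct sensors report an object with enough confidence. The `Detection` type, the `sensors_agree` function, the thresholds, and the confidence values are all hypothetical and chosen for illustration; production sensor-fusion stacks are considerably more sophisticated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    sensor: str        # e.g. "camera", "lidar", "radar"
    confidence: float  # 0.0 to 1.0, as reported by that sensor's perception stage


def sensors_agree(detections, min_confidence=0.5, min_agreeing=2):
    """Return True when enough distinct sensors report the object confidently.

    Both thresholds are illustrative assumptions, not values from any real system.
    """
    confident = {d.sensor for d in detections if d.confidence >= min_confidence}
    return len(confident) >= min_agreeing


# Hypothetical confidences for the same object on a clear day and in snowfall.
clear_day = [Detection("camera", 0.9), Detection("lidar", 0.85), Detection("radar", 0.7)]
snowfall = [Detection("camera", 0.3), Detection("lidar", 0.4), Detection("radar", 0.7)]

print("clear day:", sensors_agree(clear_day))
print("snowfall:", sensors_agree(snowfall))
```

In the snowfall case only the radar remains confident, so the vote fails even though the object is still there.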

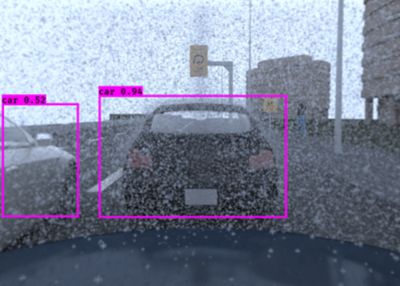

Snow reduces contrast, which makes the car to the side harder to detect.
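One common way to reason about this contrast loss is Koschmieder's law, which models the apparent contrast of an object as decaying exponentially with distance through a scattering atmosphere. The sketch below applies it with assumed numbers: the extinction coefficients and the 5% detection threshold are illustrative placeholders, not measured snow data or values from any Ansys product.

```python
import math


def apparent_contrast(intrinsic_contrast, extinction_per_m, distance_m):
    """Koschmieder's law: C(d) = C0 * exp(-beta * d)."""
    return intrinsic_contrast * math.exp(-extinction_per_m * distance_m)


def is_detectable(contrast, threshold=0.05):
    """Treat an object as detectable if its contrast meets an assumed threshold."""
    return abs(contrast) >= threshold


# Assumed extinction coefficients (1/m), for illustration only.
conditions = [("clear air", 0.0001), ("light snow", 0.005), ("heavy snow", 0.03)]

for label, extinction in conditions:
    contrast = apparent_contrast(0.8, extinction, 100.0)
    print(f"{label}: contrast {contrast:.3f}, detectable: {is_detectable(contrast)}")
```

With these assumed values, an object 100 m away stays well above the threshold in clear air and light snow but falls below it in heavy snow.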

What Happens to Sensor Accuracy in Snow?

Different sensors have different shortfalls against snow:

Camera: Decreased visibility and contrast

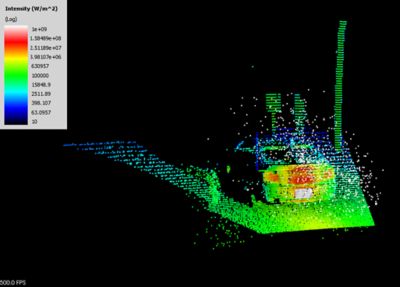

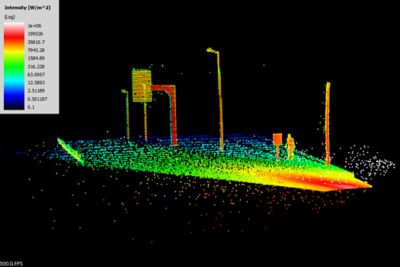

Lidar: Signal scattering, absorption, and attenuation, which lead to low-quality data for the perception algorithm and spurious results

Radar: Can detect objects, but cannot classify them correctly
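The lidar attenuation described above can be approximated with a simplified range equation: the returned signal falls off with the square of the distance and is further reduced by a two-way Beer-Lambert loss, because the pulse crosses the snow on the way out and on the way back. The following is a rough sketch under that assumption; the function names, reflectivity, extinction coefficients, and detection floor are hypothetical, and real lidar models account for much more (beam divergence, receiver optics, backscatter from the flakes themselves).

```python
import math


def relative_return_power(range_m, reflectivity, extinction_per_m):
    """Relative returned power: reflectivity * exp(-2 * alpha * R) / R^2."""
    if range_m <= 0:
        raise ValueError("range_m must be positive")
    two_way_loss = math.exp(-2.0 * extinction_per_m * range_m)
    return reflectivity * two_way_loss / range_m ** 2


def max_detection_range(reflectivity, extinction_per_m, min_power,
                        step_m=0.5, limit_m=300.0):
    """Scan outward and return the last range whose return stays above min_power."""
    best = 0.0
    for i in range(1, int(limit_m / step_m) + 1):
        r = i * step_m
        if relative_return_power(r, reflectivity, extinction_per_m) < min_power:
            break  # power only decreases with range, so we can stop here
        best = r
    return best


# Assumed values for illustration: same target, clear air versus snowfall.
clear_range = max_detection_range(0.5, 0.0, 1e-5)
snow_range = max_detection_range(0.5, 0.02, 1e-5)
print(f"clear: {clear_range} m, snow: {snow_range} m")
```

Even a modest extinction coefficient shortens the usable range noticeably, which is one reason snow degrades the data reaching the perception algorithm.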

Weatherproofing the Self-Driving Car: How Engineers Can Test New Solutions

To build autonomous vehicles that can handle the unpredictability of snow, engineers have to test their designs against countless driving scenarios. For testing, they have a few options:

Weather laboratories: These climate-controlled facilities provide repeatable weather data, but do not account for weather intensification by other vehicles and dynamic conditions on the road.

On-road testing: Driving cars on secure stretches of road exposes autonomous systems to real weather conditions, but because it depends on the weather cooperating, it cannot be relied on for rapid technology development.

Simulation: Digital testing creates limitless models with real-world accuracy, reduces the time and cost of physical prototyping, and doesn't require the cooperation of Mother Nature.

How Does Simulation Help Autonomous Cars See Better in Snow?

Simulation provides engineers with a better way to test and improve autonomous vehicle behavior in bad weather by allowing them to model limitless weather and scenario variables. With simulation, there’s no need to wait for snowy weather. Because results are available nearly instantly, self-driving car manufacturers can develop weather-aware autonomous systems more rapidly.

Simulation is highly adept at accurately predicting outcomes in harsh environments, such as outer space and the deep sea, but snow presents unique and complex challenges. For simulation to accurately reflect the impact of snow, the model must take into account each snowflake’s shape, size, position, and optical properties (for example, whether the water is clear or contains impurities). In addition, it must consider the position and shape of the snowy area on the driving surface.
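As a rough picture of what a per-flake description might look like, the sketch below generates a reproducible random field of snowflakes, each with a shape, size, position, and a single number standing in for optical impurity. Everything here is a hypothetical illustration: the `Snowflake` type, the shape names, the value ranges, and the uniform sampling are assumptions, and this is not how Ansys Speos or Fluent represent snow internally.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Snowflake:
    shape: str                # crystal type, from an assumed short list
    diameter_mm: float        # assumed size range, for illustration
    position_m: tuple         # (x, y, z) inside the sampled volume
    impurity_fraction: float  # stand-in for "clear vs. impure" water


SHAPES = ("plate", "column", "needle", "dendrite")


def generate_snowfall(count, volume_m=(20.0, 4.0, 50.0), seed=0):
    """Sample `count` flakes uniformly inside a box; a fixed seed makes it repeatable."""
    rng = random.Random(seed)
    flakes = []
    for _ in range(count):
        position = tuple(rng.uniform(0.0, extent) for extent in volume_m)
        flakes.append(Snowflake(
            shape=rng.choice(SHAPES),
            diameter_mm=rng.uniform(0.5, 5.0),
            position_m=position,
            impurity_fraction=rng.uniform(0.0, 0.05),
        ))
    return flakes


flakes = generate_snowfall(1000, seed=42)
print(len(flakes), flakes[0])
```

Fixing the seed gives the same snowfall on every run, which is the kind of repeatability that physical weather cannot offer.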

Long-distance, physics-based sensor simulations of lidar (left) and cameras in the snow (right).

Ansys Fluent is used to perform computational fluid dynamics (CFD) simulations of snow. Fluent can also analyze weather-induced sensor soiling, droplet impingement and the transition to film flows, fogging and surface condensation, frosting, icing, and deicing. The high-fidelity, reproducible weather data from these CFD simulations can be exported to Ansys Speos for both camera and lidar simulations.

By using Fluent in coupled CFD-optical solutions, AV engineering teams can accelerate their testing to confidently integrate mixed-capability sensor designs and dramatically enhance their vehicle’s perception in all weather conditions, even snow.

To learn more about how simulation can accelerate the development and testing of autonomous cars, read about autonomous sensor development and request a trial of Speos.