Quick Specs

Enrich your sensor perception test cases and coverage with accurate real-time synthetic data. Set up multi-sensor simulation in a SiL or HiL context to guarantee your ADAS/AV system performance under any operating condition.

Ansys supports the next generation of engineers

Students get free access to world-class simulation software.

Shape your future

Connect with Ansys to learn how simulation can drive your next breakthrough.

Ansys AVxcelerate provides accurate sensor simulation capabilities, enabling you to test your autonomous systems, including sensor perception, faster than relying only on actual driving or recorded data.

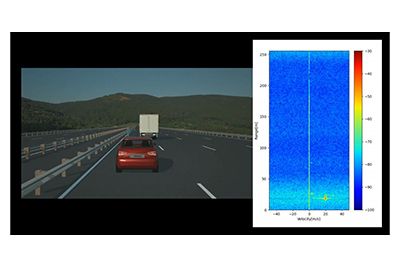

Realistic Driving Scenarios using the Driving Simulator of Your Choice

Ansys AVxcelerate Sensors provides physically accurate sensor simulation for autonomous system testing with sensor perception. Save testing time and cost while increasing perception performance for camera, lidar, radar, and thermal camera sensors. Using AVxcelerate's real-time capabilities, perform virtual testing in a software-in-the-loop (SiL) or hardware-in-the-loop (HiL) context as your design cycle progresses.

July 2025

The 2025 R2 release of AVxcelerate Sensors enhances ADAS and AV technology with next-generation cameras, radar improvements for better accuracy, low-latency RDMA for real-time hardware-in-the-loop (HiL) simulation, and customizable visibility.

The accelerated image processing engine and direct DLL output to the CPU reduce latency, boosting performance by 2.5 times and improving the 8MP camera frame time by 10 milliseconds. Visibility distance is adjustable for daylight fog, with precise particle definition (water, smog, dust) for more accurate environmental testing.

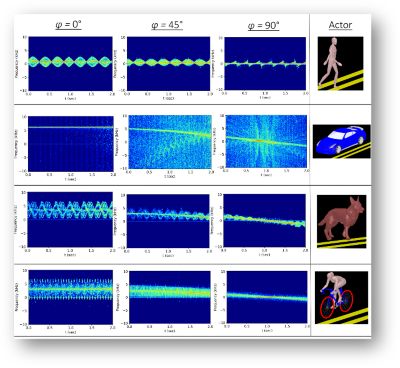

The Phase Noise Model for FMCW radar introduces nonlinear noise effects, making simulations more accurate by avoiding an unrealistically perfect signal. AVxcelerate Sensors now also simulates radar filters to prevent aliasing and ensure accurate target detection within the correct frequency bandwidth.

This demo video shows how ANSYS solutions for autonomous vehicles (AVs) can work together to provide a complete solution for designing and simulating AVs, including lidar and sensors, functional safety and safety-critical embedded code — all simulated through virtual reality to reduce physical road testing requirements.

“Our camera sensor technology is critical to the work we are doing in supporting autonomous function for our customers. Using Ansys AVxcelerate Sensors during ADAS/AD testing and validation, we were able to confidently test real-life scenarios that were previously off-limits to us with simulation, with complete confidence in the accuracy of our results. Even though the work to develop a well-rounded solution is still ongoing, the collaboration between Ansys AVxcelerate Sensors and Continental camera sensor solutions is already delivering promising results.”

— Dr. Martin Punke Head of Camera Product Technology / Continental

To achieve a high level of accuracy, advanced driver assistance systems/autonomous driving (ADAS/AD) technology requires Continental to target its camera sensors for simulation. Continental engineers perform real-world driving on test tracks and roads to train, test, and validate ADAS and AD systems. They also perform component-level testing and simulation; however, only a limited number of engineering simulation solutions are available to tackle this problem. Even though the effort to develop a well-rounded solution is ongoing, the collaboration between Ansys AVxcelerate Sensors and Continental camera sensor solutions is delivering promising results.

Ansys AVxcelerate provides physically accurate sensor simulation for autonomous system testing with sensor perception in the loop. Save on testing time and cost while increasing perception performance for camera, lidar, radar, and thermal sensors.

Benefit from powerful ray-tracing capabilities to recreate sensor behavior and easily retrieve sensor outputs through a dedicated interface.

AVXCELERATE SENSOR RESOURCES & EVENTS

Join us to hear about new capabilities and features in the 2023 R2 release of Ansys AV Simulation (AVxcelerate). This release introduces improvements to the camera, thermal camera, and radar models and to the simulation ecosystem, allowing users to run more accurate, higher-fidelity simulations.

To reach a sustainable business model, the development of AD systems involves an intensive trade-off between performance and safety. Learn how Ansys solutions address critical technical challenges in areas such as sensor and HMI development and system validation.

In-cabin sensing system requirements are increasingly becoming an essential part of government policies and of the programs run by car safety rating organizations. Learn about in-cabin sensing system requirements and watch physics-based sensor simulation applied to the development and validation of in-cabin monitoring systems.

White Paper

It's vital to Ansys that all users, including those with disabilities, can access our products. As such, we endeavor to follow accessibility requirements based on the US Access Board (Section 508), Web Content Accessibility Guidelines (WCAG), and the current format of the Voluntary Product Accessibility Template (VPAT).

If you are facing engineering challenges, our team is here to help. With our wealth of experience and commitment to innovation, we invite you to reach out to us. Let's work together to turn your engineering obstacles into opportunities for growth and success. Contact us today to start the conversation.