
Ansys Blog

December 13, 2018

Top 3 Challenges to Produce Level 5 Autonomous Vehicles

When will we get self-driving cars? Today, but not in the way you think.

SAE International classifies driving automation on a scale from Level 0 (no automation) to Level 5 (full automation).
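For quick reference, here is a minimal Python sketch that encodes the six SAE J3016 levels (Level 0 through Level 5) as an enum. The one-line descriptions paraphrase the standard. `human_is_fallback` is a hypothetical helper that captures the dividing line this article keeps returning to: up to Level 3, a human must be ready to take control; at Levels 4 and 5, the system is its own fallback.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels, paraphrased from SAE J3016."""
    NO_AUTOMATION = 0           # the human does all of the driving
    DRIVER_ASSISTANCE = 1       # the system helps with steering OR speed
    PARTIAL_AUTOMATION = 2      # the system steers AND controls speed; the human supervises
    CONDITIONAL_AUTOMATION = 3  # the system drives in limited conditions; the human takes over on request
    HIGH_AUTOMATION = 4         # the system drives and handles fallback, but only within its operating domain
    FULL_AUTOMATION = 5         # the system drives anywhere a human could, with no human fallback


def human_is_fallback(level: SAELevel) -> bool:
    """Hypothetical helper: must a human driver be ready to take control?"""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


for level in SAELevel:
    role = "human fallback" if human_is_fallback(level) else "no human fallback"
    print(level.value, level.name, role)
```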

Arguably, we are currently on the cusp of Level 3. A car at this level has limited autonomous features, such as:

- Advanced driver assistance systems (ADAS).

- Lane-keep-assist (LKA).

- Traffic-jam-assist (TJA).

We are far from seeing fully autonomous cars on the road. But cars do have a level of autonomy: Level 3 or slightly lower, to be precise.

These vehicles can make informed decisions for themselves, such as a TJA system deciding to overtake slower-moving cars. However, humans still need to pay attention to the road because they may be required to take control when the vehicle is unable to execute the task at hand.

So, will we eventually be able to buy fully autonomous vehicles (Level 5 autonomy)?

“Yes, but it will take time,” says Rajeev Rajan, fellow thought leader and senior director of solutions at Ansys. “Getting from Level 3 to Level 5 is an enormous jump because there is no driver to act as a safety net. The car is literally on its own and must be able to react to all situations that might arise.”

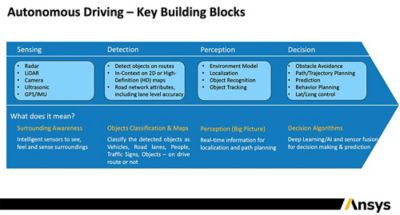

The building blocks of a fully autonomous vehicle.

To operate without a driver, a Level 5 autonomous car must see its surroundings, perceive obstacles and finally act safely based on its perceptions.

Seeing, perceiving and acting on par with humans, or better, are the three big obstacles to implementing Level 5 autonomous vehicles.

Current State of Autonomous Driving

So, what options do you have to ride in a self-driving car given the current autonomous driving market?

One of the most advanced options is the Audi A8. It has been called a Level 3 autonomous vehicle by many in the industry thanks to its TJA system. However, its Level 3 status is being debated because questions about legality, insurance and road sign variation have blocked the TJA system from the North American market.

For now, the closest thing to a Level 5 autonomous vehicle in North America is Waymo’s robo-taxi service, which can claim Level 3 or may approach Level 4. A Level 4 vehicle can handle all driving, including situations where something goes wrong, without human intervention, but only within a limited operating domain such as a specific mapped area or set of conditions. Unfortunately, Waymo’s system is still experiencing delays.

Considering the difficulties companies are having bringing a Level 3 autonomous car to market, how do we get to Level 5 autonomous cars?

Level 5 Autonomous Vehicles Need Better Sensors

For Level 5 autonomous cars to work, we need to accept that no sensor is perfect. These cars will require a variety of sensors to properly see the world around them.

For instance, radar can’t make out complex shapes, but it’s great at looking through fog and rain. Lidar is better at capturing an object’s shape, but it is short-ranged and affected by weather.

Level 5 autonomous cars will be able to sense their environment using multiple sensors.

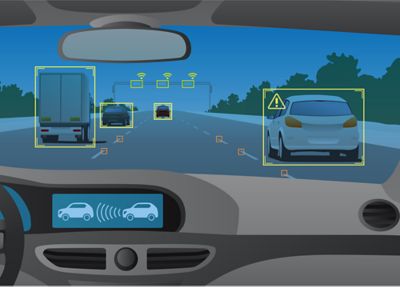

Autonomous vehicles use multiple sensors to act as backups to each other. “The information provided by the sensors overlaps and that’s good. They correlate information and make the car safer,” says Rajan.

It’s when sensors don’t agree, or when the environment makes them ineffective, that humans must grab the wheel again.
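The idea of sensors backing each other up, and of a handover when they disagree, can be illustrated with a toy cross-check. This Python sketch assumes each sensor reports a distance to the obstacle ahead and can flag itself as degraded (as lidar might be in fog). The median-and-tolerance rule, the 2 m tolerance, and the `Reading` and `cross_check` names are illustrative assumptions; this is not how any production system or Ansys tool fuses sensor data.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Reading:
    sensor: str          # e.g. "radar", "lidar", "camera"
    distance_m: float    # estimated distance to the obstacle ahead
    valid: bool = True   # False if the sensor reports itself degraded (fog, glare, ...)


def cross_check(readings: list[Reading], tolerance_m: float = 2.0,
                min_sensors: int = 2) -> tuple[Optional[float], bool]:
    """Return (fused_distance, needs_handover).

    Assumed rule: take the median of the valid readings, and request a
    human takeover if fewer than `min_sensors` readings are valid or any
    valid reading is more than `tolerance_m` away from that median.
    """
    usable = [r.distance_m for r in readings if r.valid]
    if len(usable) < min_sensors:
        return None, True  # not enough working sensors to cross-check
    fused = median(usable)
    disagree = any(abs(d - fused) > tolerance_m for d in usable)
    return fused, disagree


# All three sensors roughly agree: no handover needed.
print(cross_check([Reading("radar", 40.2), Reading("lidar", 39.8), Reading("camera", 41.0)]))
# (40.2, False)

# Fog degrades lidar and camera, leaving only radar: hand control back.
print(cross_check([Reading("radar", 40.2), Reading("lidar", 0.0, valid=False),
                   Reading("camera", 0.0, valid=False)]))
# (None, True)
```

Because the median ignores a single outlier, one faulty sensor cannot drag the fused estimate far, but its disagreement still triggers a handover request, mirroring the moment when a human must grab the wheel.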

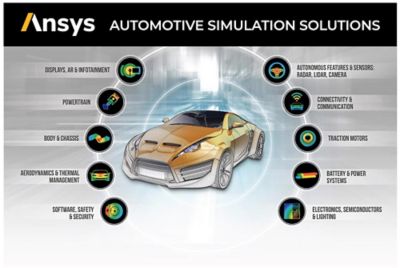

Ansys plays a big role in simulating the physics associated with sensors. Engineers need this data to optimize sensor designs. For instance, Ansys solvers can simulate how fog affects lidar so engineers can design more effective lidar sensors.

To learn how Ansys is helping engineers get sensors ready for Level 5 autonomy, watch Weather Simulation for Virtual Sensor Testing or come visit Ansys at CES.

Level 5 Autonomous Vehicles Need Perceptive Artificial Intelligence

“You can’t simply put sensors on a car and expect it to recognize its surroundings,” notes Rajan.

The sensors create a large influx of data, and Level 5 autonomous cars need to interpret this data as objects in their environment. This must happen in real time: even a second of latency can create an unsafe situation.
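To put "even a second of latency" in perspective, a short calculation helps: at constant speed, the distance a car covers before it can respond is simply speed × latency. The 100 km/h figure in this Python sketch is only an illustrative highway speed.

```python
def distance_during_latency(speed_kmh: float, latency_s: float) -> float:
    """Metres travelled at constant speed before the car can react."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * latency_s


for latency in (0.1, 0.5, 1.0):
    metres = distance_during_latency(100, latency)
    print(f"{latency:.1f} s at 100 km/h -> {metres:.1f} m")
# 0.1 s at 100 km/h -> 2.8 m
# 0.5 s at 100 km/h -> 13.9 m
# 1.0 s at 100 km/h -> 27.8 m
```

In other words, a one-second delay at highway speed means the car travels almost 28 m before its perception system has even reported what is ahead.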

Level 5 autonomous vehicles need to perceive their surroundings.

The only feasible way to process the volume of raw data from sensors is with machine learning and artificial intelligence (AI).

The AI system will need to be trained to recognize objects on a road. Training an AI to recognize stationary objects that look similar, like stop signs, is relatively straightforward. Training an AI to recognize a person running across a highway is considerably more complicated.

Additionally, setting up this scenario on the road is expensive and a safety concern. The AI system still needs to see this scenario numerous times to learn how to perceive it. Therefore, training the AI for this scenario in the real world isn’t feasible.

This is where Ansys simulation comes into play. It helps engineers set up AI training scenarios in a fast, safe and cost-effective environment.
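Simulation makes it cheap to show the AI the same kind of event many times, with variations. As a minimal sketch, assume a pedestrian-crossing scenario can be described by a handful of parameters. The Python below draws randomized variants of that scenario. The `CrossingScenario` fields, their ranges and `sample_scenarios` are all hypothetical. They illustrate the general idea of parameter randomization, not an Ansys API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class CrossingScenario:
    """Hypothetical parameters for a 'pedestrian runs across the road' event."""
    pedestrian_speed_ms: float   # from walking pace to sprinting
    entry_distance_m: float      # how far ahead of the car the pedestrian appears
    vehicle_speed_kmh: float
    weather: str
    daylight: bool


def sample_scenarios(n: int, seed: int = 0) -> list[CrossingScenario]:
    """Draw n randomized variants so the perception model sees the event many ways."""
    rng = random.Random(seed)  # seeded so the same training set can be regenerated
    return [
        CrossingScenario(
            pedestrian_speed_ms=rng.uniform(1.0, 7.0),
            entry_distance_m=rng.uniform(10.0, 120.0),
            vehicle_speed_kmh=rng.choice([50, 80, 100, 120]),
            weather=rng.choice(["clear", "rain", "fog", "snow"]),
            daylight=rng.random() < 0.5,
        )
        for _ in range(n)
    ]


scenarios = sample_scenarios(1000, seed=42)
foggy = sum(s.weather == "fog" for s in scenarios)
print(foggy, "of", len(scenarios), "variants take place in fog")
```

Seeding the generator is a deliberate choice. It lets engineers regenerate exactly the same set of variants when they retrain or debug a model.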

Level 5 Autonomous Vehicles Need Safe Decision Making

Once the autonomous vehicle perceives its surroundings, it then needs to make decisions. Every possible decision needs to be validated to ensure safety.

Ansys offers various tools engineers can use to ensure an autonomous car is safe for the public.

The challenge is that the surroundings that influence the autonomous vehicle’s decisions are governed by thousands of parameters, such as:

- Traffic conditions

- Pedestrian conditions

- Weather conditions

When vehicle-to-everything (V2X) communication is established, a car will also need to consider information being communicated by other autonomous vehicles and smart infrastructure in the area.

Ensuring that the decisions made by the self-driving car are safe is the hardest part of reaching Level 5 autonomy. To ensure safety, engineers must test, redesign and validate the decision-making algorithms over billions of driving scenarios, including unlikely ones.
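A rough calculation shows why the scenario count reaches into the billions. If each parameter is tested at a fixed number of values, an exhaustive test grid contains the product of those counts. The parameter names and value counts in this Python sketch are hypothetical and deliberately coarse. They only illustrate how quickly the grid grows with a handful of parameters, far short of the thousands described above.

```python
from math import prod

# Hypothetical, coarse discretization: how many distinct values a test plan
# might try for each parameter.
parameter_levels = {
    "traffic_density": 5,
    "pedestrian_count": 6,
    "pedestrian_behavior": 4,
    "weather": 6,
    "time_of_day": 4,
    "road_type": 8,
    "ego_speed": 10,
    "lead_vehicle_behavior": 6,
    "road_surface": 4,
    "sensor_degradation": 5,
}


def scenario_count(levels: dict[str, int]) -> int:
    """Size of an exhaustive test grid: product of per-parameter value counts."""
    return prod(levels.values())


print(f"{len(parameter_levels)} parameters -> {scenario_count(parameter_levels):,} combinations")
# 10 parameters -> 27,648,000 combinations

# Two more hypothetical parameters with 10 values each multiply the grid by 100.
extended = {**parameter_levels, "v2x_messages": 10, "signal_timing": 10}
print(f"{len(extended)} parameters -> {scenario_count(extended):,} combinations")
# 12 parameters -> 2,764,800,000 combinations
```

Ten coarse parameters already give about 27.6 million combinations, and two more push the total past 2.7 billion. Numbers like these are why these scenarios need to be tested virtually.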

It’s impossible to safely test all these scenarios in the real world within a reasonable time. The only way to ensure every condition is tested is to set these scenarios up in a virtual world. Ansys has the capabilities to set up and test these scenarios with the vehicle’s hardware in the loop.

To learn how a simulation tests an autonomous vehicle’s decision-making, watch the video below or visit Ansys at CES.