In parts 1 and 2 of this blog series, we discussed the speedups achieved with the Ansys Fluent GPU solver for several use cases, including automotive external aerodynamics, canonical laminar flows, conjugate heat transfer, and more.

In part 3, we showcase the latest use cases enabled in 2024 across different GPU hardware options and levels of model complexity, including the substantial speedups and energy savings you can expect when running your computational fluid dynamics (CFD) simulations on GPUs.

Download the latest version of the Ansys Fluent GPU solver on the Ansys Customer Portal.

New Use Cases Available With the Latest Version of the Fluent GPU Solver

Since the Fluent GPU solver launched in 2023, we have continued to introduce new capabilities with each software release. In 2024, we introduced the following capabilities:

- AMD GPU hardware support

- Nonconformal interfaces and sliding mesh

- Detailed and stiff chemistry solvers

- Discrete phase model (DPM)

- Incompressible and compressible solvers

- Far-field boundary conditions

- Acoustics: Ffowcs Williams-Hawkings method

These new capabilities open the door to new use cases, allowing users to run advanced combustion, external aerodynamics, aeroacoustics, and other simulations with much faster solve times and lower power consumption, at the same level of accuracy as the traditional CPU-based Fluent solver.

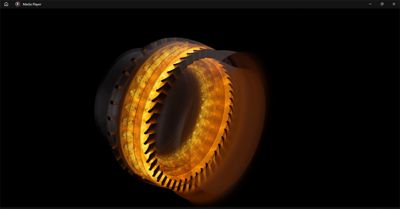

Solve a Full-wheel Multistage Compressor in Less Than Eight Hours

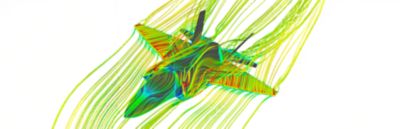

With GPU hardware, engineers can now run far more complex simulations at much larger cell counts and dramatically faster speeds. In the use case shown in Figure 1, we simulated a 3.5-stage, full-wheel (360-degree) compressor with a mesh of approximately 500 million cells. The case ran on 12 NVIDIA A100 Tensor Core GPUs and used the new sliding mesh capability available in version 2024 R1, together with enhanced large eddy simulation (LES) numerics.
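For a rough sense of scale, dividing the mesh evenly across the GPUs gives the approximate number of cells each GPU handles. This is an idealization: actual partitioning and memory use depend on the solver settings, the physics models, and the memory size of the GPUs.

```latex
\frac{N_\text{cells}}{N_\text{GPU}} \approx \frac{500 \times 10^{6}}{12} \approx 4.2 \times 10^{7}\ \text{cells per GPU}
```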

Figure 1. Simulation of a full-wheel 360-degree compressor using the Ansys Fluent GPU solver

Running a full-wheel compressor simulation used to be a "hero" calculation that you might attempt a couple of times per year, with solve times of weeks or months. Now, by combining the parallel performance of GPU hardware with the latest capabilities of the Fluent GPU solver, you can get results within the working hours of a single day.

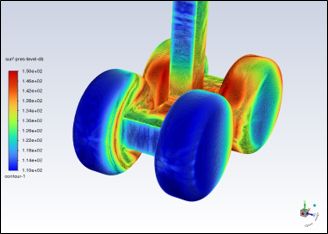

Speed Up External Aerodynamics Studies of Aircraft Landing Gear by 10.5X

In this use case, we used a rudimentary landing gear (RLG) model that has been a well-known benchmark for over a decade. It was presented at the first Workshop on Benchmark Problems for Airframe Noise Computations (BANC-I) in June 2010 and again at the second workshop (BANC-II). This use case is significant because the turbulent flow over the struts and wheels produces both drag and noise. Despite the relatively simple geometry, the flow exhibits significant separation and a developing wake structure, both of which are challenging to capture accurately in simulation.

Figure 2. Upwind turbulent flow simulation

Figure 3. Downwind turbulent flow simulation

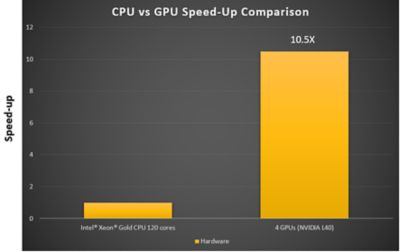

The mesh for this simulation totaled 14.4 million polyhedral cells, and the case was run as a transient, fully scale-resolving LES. On four NVIDIA L40 GPUs, the Fluent GPU solver completed the simulation 10.5 times faster than the CPU configuration, which ran on 120 Intel® Xeon® Gold CPU cores.
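Assuming the speedup is measured as the ratio of wall-clock solve times for the same case, it can be written as shown below. The 21-hour CPU run is a purely hypothetical figure used for illustration, not a time reported in this study.

```latex
S = \frac{T_\text{CPU}}{T_\text{GPU}} = 10.5
\quad\Longrightarrow\quad
T_\text{GPU} = \frac{T_\text{CPU}}{10.5},
\qquad \text{e.g.}\quad \frac{21\ \text{h}}{10.5} = 2\ \text{h}
```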

Figure 4. Four NVIDIA L40 GPUs are 10.5 times faster than running the same study on a CPU hardware configuration.

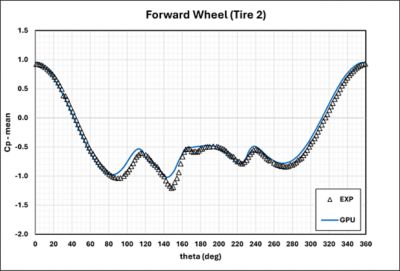

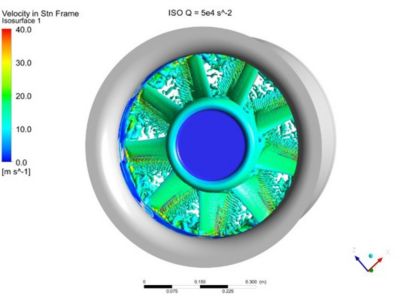

The GPU results also agreed closely with experimental data for this use case. We computed the mean pressure coefficients along the midline of two tires and compared them against the measured values.

Figure 5. GPU results closely match experimental data for both the forward tire (left) and the rear tire (right).

Solve a 24 Million-cell Aeroacoustics Model in Less Than 12 Hours

Simulating aeroacoustics can be extremely complex and costly, because there can be multiple noise sources driven by interacting mechanisms. Because acoustic noise generation and propagation are inherently unsteady, these simulations can take days or weeks to run on traditional parallel CPU hardware. Fluent software's native GPU solver can reduce these times by an order of magnitude or more, depending on the type and number of GPUs used.

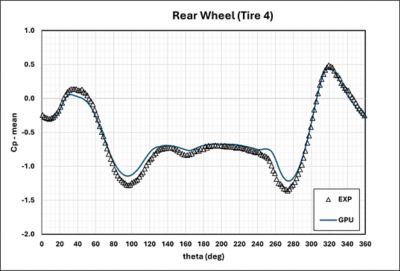

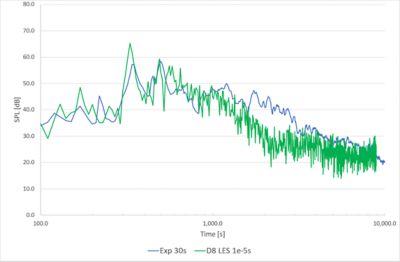

In the study shown in Figures 6 and 7, we ran an aeroacoustic simulation of a low-pressure axial fan on the Fluent GPU solver using the well-known Ffowcs Williams-Hawkings (FW-H) model. With a total cell count of 24.2 million, the case ran on eight NVIDIA L40 GPUs in around 11 hours (about 1.5 hours per revolution of the fan rotor, with seven to eight revolutions required).
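The reported run time follows from simple arithmetic. The sketch below uses a hypothetical helper (not part of Fluent) that multiplies the approximate time per rotor revolution by the number of revolutions. With the figures quoted above, it brackets the run time between 10.5 and 12 hours, which is consistent with the roughly 11-hour result.

```python
def total_runtime_hours(hours_per_revolution: float, revolutions: int) -> float:
    """Estimate wall-clock time as (time per rotor revolution) x (number of revolutions)."""
    return hours_per_revolution * revolutions


if __name__ == "__main__":
    # Values quoted in the text: ~1.5 h per revolution, 7 to 8 revolutions.
    for revs in (7, 8):
        print(f"{revs} revolutions -> {total_runtime_hours(1.5, revs):.1f} h")
```

Running the script prints one estimate for each end of the revolution range, making it easy to see how the total scales if more revolutions are needed to reach a statistically steady acoustic signal.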

Figure 6 illustrates the vortex structures emanating from the rotor due to turbulent separation. This turbulence, along with the pressure fluctuations from the moving blades, gives rise to the characteristic fan tonal and broadband noise signatures. We also saw excellent agreement with experimental data, as shown in Figure 7.

Figure 6. Aeroacoustic simulation of a low-pressure axial fan

Figure 7. This graph shows excellent agreement between CFD results and experimental data.

Running the same simulation on a baseline 120-core CPU system typically takes several days. Running such studies on GPUs enables broader design space exploration, supporting more thorough optimization and more efficient designs.

What About Solver Accuracy? Fluent GPU Solver vs. Experiment

Speed improvements for your CFD studies are of clear value, but far less so if your results are not accurate or do not have good agreement with experimental data. Fluent software is well known for its accurate solvers and physics models, and we have performed extensive accuracy validation studies since the Fluent GPU solver’s release in 2022. These validation studies show excellent agreement between GPU results and experimental data.

To access more validation benchmarks, read our 2024 R2 validation studies white paper.

Realize Power, Energy, and Cost Savings With GPUs

The value of GPU speedups is clear: faster solves allow more design iterations within shorter design cycles, leading to a faster time to market overall. But what about GPU power and energy costs? Ansys has performed studies to understand both the power consumption and hardware cost of GPU versus CPU configurations.

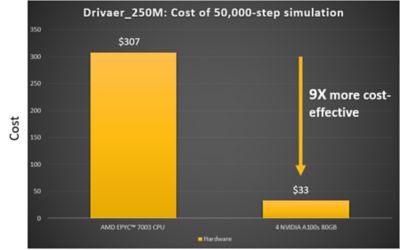

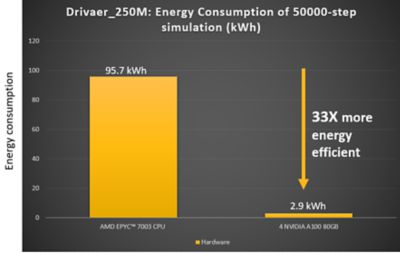

For the comparison shown in Figures 8 and 9, we ran a 250-million-cell DrivAer external aerodynamics study with LES for 50,000 time steps on both a CPU configuration and a GPU configuration. Our analysis shows that running this model on GPU hardware is nine times more cost-effective and 33 times more energy-efficient than running it on CPU hardware.
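Energy efficiency compares the total energy consumed over a run, which depends on both the average power draw and the wall-clock time. Assuming the comparison is made this way (the exact methodology behind Figure 9 is not described here), the ratio can be written as:

```latex
\frac{E_\text{CPU}}{E_\text{GPU}}
= \frac{\bar{P}_\text{CPU}\, T_\text{CPU}}{\bar{P}_\text{GPU}\, T_\text{GPU}}
\approx 33
```

Because time appears in both terms, a GPU system that finishes the run much faster can use far less total energy even if its average power draw is comparable to or higher than that of the CPU system.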

Figure 8. Four NVIDIA A100 GPUs are nine times more cost-effective than running the same model on a CPU hardware configuration.

Figure 9. Four NVIDIA A100 GPUs are 33 times more energy-efficient than running the same model on CPU hardware configurations.

The power, energy, and time savings of GPUs are substantial. Our support for both NVIDIA and AMD GPUs helps provide broad access to accelerated computing. Cloud-based HPC solutions can also be a great option: Ansys offers its own cloud and HPC solutions and partners with cloud and hardware vendors.

We are witnessing a step change in CFD with the movement toward general-purpose GPU computing, and Ansys is leading the way. As GPUs become increasingly available and more businesses adopt them for faster workflows and lower costs, it is critical to stay on the cutting edge and invest early. By partnering with Ansys, you can trust in software that is rigorously and continuously benchmarked for speed and accuracy, backed by an R&D team dedicated to delivering the best possible CFD software.

Ansys is also the first to adopt the NVIDIA Omniverse Blueprint for real-time computer-aided engineering digital twins, a reference workflow of NVIDIA acceleration libraries, artificial intelligence frameworks, and Omniverse technologies that enables real-time, interactive physics visualization in Ansys applications. This will help users achieve 1,200x faster simulations and real-time visualization.

Where Can I Learn More?

New to the Fluent GPU solver? Start with our free learning course available on the Ansys Innovation Space.

Access our Fluent GPU solver documentation page on the Ansys Help site.

Download the Fluent GPU solver validation white paper.

Have additional questions? Access the Fluent GPU solver FAQ page.