ANSYS BLOG

September 29, 2022

How Simulation is Creating a Mixed Reality

As the standard bearer for the many benefits of combining physical and virtual worlds, simulation is, not surprisingly, at the heart of the latest advancements in extended reality (XR). That’s the umbrella term for technologies like virtual, augmented, and mixed reality, along with all the wearables, sensors, artificial intelligence (AI), and software those rapidly evolving technologies encompass. That’s a pretty big umbrella, with applications in entertainment, healthcare, employee training, industrial manufacturing, remote work, and more already in use — and many more on the horizon.

Because XR is still being defined, it’s difficult to get a handle on just how big it will become. Some analysts valued the 2021 XR market at $31 billion and expect it to reach $300 billion by 2024 [1]. If those predictions are even close to reality, there is surely a plethora of new XR products, applications, and experiences in development right now at companies both large and small. But what is needed to enable XR to achieve this potential?

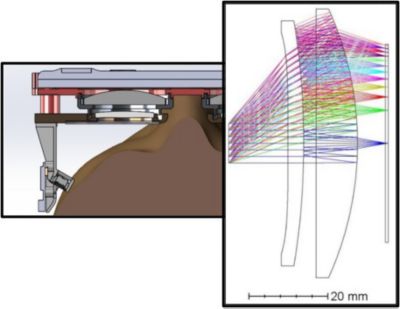

Optics bring extended reality (XR) into focus, but unlike traditional camera lenses, XR employs more compact waveguide and “pancake lens” designs.

Perhaps breaking the term XR down into its individual parts will help; a nice review is provided here. As described in that article, augmented reality (AR) enables you to add a virtual image on top of your view of the real world, while virtual reality (VR) takes you away from the real world entirely and immerses you — to varying degrees — in a virtual world. Mixed reality (MR), as its name implies, is a mix of AR and VR.

What is Mixed Reality?

Mixed reality blends our physical world with digital assets in a way that enables virtual objects to interact with the real world in real time.

Imagine a structural engineer and an electronics engineer standing in the same room, seeing each other and a virtual model of a motor floating in the real world. They can spin it, enlarge it, or look inside the model to see its components like they could on a computer screen. They can also walk around it or place it in the engine compartment of a physical automobile to see how it fits in the actual space, get a better understanding of how it interacts with other system components, and collaborate without losing important verbal and nonverbal communication cues. Of course, not everyone would need to be in the same room, either. If an interactive 3D model of an engine can be projected in the real room, why not a 3D hologram of another engineer 1,000 miles away?

It's easy to imagine similar scenarios in the classroom, the operating room, the factory floor, and beyond. Maximizing the benefits of MR requires microphones, cameras, and sensors such as accelerometers, infrared detectors, and eye trackers, as well as fast connectivity to data in the cloud. To experience the full effect of these technologies, users must wear headsets.

The operating room is just one use case for mixed reality. It could be used as a more effective training tool or to help guide surgeons.

Make Sure the Optics are Right

To create your desired MR experience, you must first address the optical design components and their challenges. Headsets need to be compact and lightweight so they are comfortable and even stylish to wear, enabling their use over many hours each day. The digital images need to be bright enough to view in all lighting conditions, including sunny days. Achieving high resolution and a sufficient depth of field (DOF, the distance between the nearest and furthest points in an image that are in focus) is also a challenge.
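As a rough illustration of the depth-of-field definition above, here is a minimal sketch based on the textbook thin-lens approximation. It is not how OpticStudio evaluates a design, and the function name, focal length, f-number, and circle-of-confusion values are all illustrative assumptions rather than headset specifications.

```python
import math


def depth_of_field(focal_mm, f_number, focus_mm, coc_mm):
    """Return (near, far, dof) in mm using the thin-lens approximation.

    coc_mm is the acceptable circle of confusion (blur spot diameter).
    far and dof are math.inf when the focus distance is at or beyond
    the hyperfocal distance.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_mm - 2 * focal_mm)
    if focus_mm >= hyperfocal:
        return near, math.inf, math.inf
    far = focus_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_mm)
    return near, far, far - near


if __name__ == "__main__":
    # Hypothetical numbers chosen only to exercise the formula.
    near, far, dof = depth_of_field(focal_mm=20.0, f_number=2.0,
                                    focus_mm=1000.0, coc_mm=0.01)
    print(f"in focus from {near:.0f} mm to {far:.0f} mm (DOF {dof:.0f} mm)")
```

In this simplified model, a smaller aperture (larger f-number) widens the in-focus range, which hints at why brightness and depth of field tend to pull a design in opposite directions.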

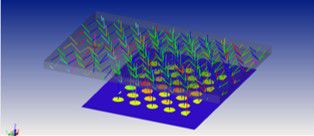

To create the most authentic and visually accurate MR environments, companies are using optical simulation tools such as Ansys Zemax OpticStudio and Ansys Lumerical products to design optical systems. OpticStudio and Lumerical can help you reach and optimize your optical design goals for MR applications by providing a full, integrated solution at both macro and sub-wavelength scales. OpticStudio can simulate the entire system at a macro scale, while Lumerical can simulate the diffractive components that are increasingly being used to both improve performance and reduce the size of these systems, which requires sub-wavelength physics.

Using OpticStudio and Lumerical in tandem allows you to design all the optical components in the device, from the light source to the human eye. Capabilities for optimization and tolerancing help ensure your designs are successful and your products are manufacturable. This is all done using models and tools for ray tracing, finite-difference time-domain (FDTD), and rigorous coupled-wave analysis (RCWA), backed by a powerful solver.

Examples of using Lumerical and OpticStudio in tandem are illustrated by waveguide designs and “pancake lens” designs that are common in XR applications. In optics, waveguides are true to their name in the sense that they guide waves of light. A pancake design, also referred to as folded optics, brings a lens and display closer together for a more compact design that also minimizes unwanted movement.

An example of a pancake lens design (left) and a waveguide design (above)

To optimize waveguide design, you can use Lumerical to simulate the behavior of light through the part of the optical system that uses diffraction gratings, which can be designed to diffract or disperse light in different directions with high efficiency. On a macro scale, you can use OpticStudio to simulate the behavior of light throughout the whole optical system.
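To make the role of a diffraction grating in a waveguide a little more concrete, the sketch below applies the textbook grating equation, n_out·sin(θ_m) = n_in·sin(θ_in) + m·λ/Λ, and then checks whether the diffracted ray would be trapped by total internal reflection. It says nothing about diffraction efficiency, which is what a rigorous solver is for, and it does not use any Lumerical or OpticStudio API. The function names, wavelength, grating period, and refractive index are assumptions picked for illustration.

```python
import math


def diffracted_angle_deg(wavelength_nm, period_nm, order,
                         n_in=1.0, n_out=1.0, incidence_deg=0.0):
    """Angle of a diffracted order from the grating equation.

    Returns None when the order is evanescent (no propagating solution).
    """
    s = (n_in * math.sin(math.radians(incidence_deg))
         + order * wavelength_nm / period_nm) / n_out
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


def is_guided(angle_deg, n_waveguide, n_outside=1.0):
    """True if the ray exceeds the critical angle for total internal reflection."""
    if angle_deg is None:
        return False
    critical_deg = math.degrees(math.asin(n_outside / n_waveguide))
    return abs(angle_deg) > critical_deg


if __name__ == "__main__":
    # Hypothetical in-coupler: green light, 400 nm period, index-1.8 glass.
    for m in (0, 1, 2):
        angle = diffracted_angle_deg(532.0, 400.0, m, n_out=1.8)
        print(m, angle, is_guided(angle, n_waveguide=1.8))
```

With these assumed values, only the first order lands between the critical angle and grazing incidence, so it is the one that would travel along the waveguide.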

To enhance VR pancake designs, you can use Lumerical to simulate the lens’ optical filters, including its curved polarizer and quarter-wave plate, and then simulate the full system in OpticStudio to detect and predict ghost images.
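The polarization trick behind folded optics can be sketched with Jones calculus. The simplified model below sends linearly polarized light through a wave plate twice, as happens between two reflections in a pancake design, and reports how much light ends up in each polarization. It assumes a flat, ideal plate with its fast axis at 45 degrees, a fixed coordinate frame, and no mirror or surface losses; the function names are hypothetical and this is not the Lumerical or OpticStudio workflow.

```python
import cmath
import math


def retarder_at_45(retardance_rad):
    """Jones matrix of a wave plate with its fast axis at 45 degrees."""
    e = cmath.exp(1j * retardance_rad)
    return [[(1 + e) / 2, (1 - e) / 2],
            [(1 - e) / 2, (1 + e) / 2]]


def apply(matrix, vec):
    """Multiply a 2x2 Jones matrix by a 2-element Jones vector."""
    return [matrix[0][0] * vec[0] + matrix[0][1] * vec[1],
            matrix[1][0] * vec[0] + matrix[1][1] * vec[1]]


def double_pass_split(retardance_rad):
    """Send x-polarized light through the plate twice.

    Returns the (x, y) intensity fractions after the second pass.
    """
    plate = retarder_at_45(retardance_rad)
    out = apply(plate, apply(plate, [1.0, 0.0]))
    return abs(out[0]) ** 2, abs(out[1]) ** 2


if __name__ == "__main__":
    ideal = double_pass_split(math.pi / 2)            # exact quarter-wave plate
    off_spec = double_pass_split(0.95 * math.pi / 2)  # assumed 5% retardance error
    print("ideal:", ideal)
    print("5% error:", off_spec)
```

For an exact quarter-wave plate, two passes rotate the polarization by 90 degrees, so light that a reflective polarizer rejected on the first encounter is transmitted on the second. When the retardance is off, a small fraction stays in the original polarization; in a real headset this kind of leakage is one possible contributor to ghost images, which is why the full system still needs to be simulated.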

Lumerical and OpticStudio can also be dynamically linked to provide seamless and deep integration. This connected workflow enables fast design iteration and is important for overall optimization and tolerancing of the system. Tolerance analysis examines the impacts of expected manufacturing and assembly errors to ensure all model specifications are balanced appropriately to achieve your desired optical designs.
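The idea behind tolerance analysis can be illustrated with a small Monte Carlo sketch. It perturbs a toy system of two thin lenses with assumed Gaussian manufacturing and assembly errors and counts how many simulated builds keep the effective focal length within a chosen specification. The two-lens model, function names, nominal values, and tolerances are all assumptions for illustration; the tolerancing tools in OpticStudio work on the full optical model and are not represented here.

```python
import random


def system_focal_length(f1_mm, f2_mm, gap_mm):
    """Effective focal length of two thin lenses separated by gap_mm."""
    return 1.0 / (1.0 / f1_mm + 1.0 / f2_mm - gap_mm / (f1_mm * f2_mm))


def monte_carlo_yield(nominal, sigmas, spec_mm, trials=5000, seed=0):
    """Fraction of perturbed builds whose focal length is within spec_mm of nominal.

    nominal and sigmas are (f1, f2, gap) tuples in mm; each parameter is
    drawn from a Gaussian centred on its nominal value.
    """
    rng = random.Random(seed)
    target = system_focal_length(*nominal)
    passed = 0
    for _ in range(trials):
        build = [rng.gauss(value, sigma) for value, sigma in zip(nominal, sigmas)]
        if abs(system_focal_length(*build) - target) <= spec_mm:
            passed += 1
    return passed / trials


if __name__ == "__main__":
    nominal = (40.0, 60.0, 10.0)  # hypothetical f1, f2, and spacing in mm
    tight = monte_carlo_yield(nominal, (0.05, 0.05, 0.02), spec_mm=0.1)
    loose = monte_carlo_yield(nominal, (0.5, 0.5, 0.2), spec_mm=0.1)
    print(f"yield with tight tolerances: {tight:.1%}")
    print(f"yield with loose tolerances: {loose:.1%}")
```

Comparing the two runs shows the trade-off a tolerance analysis is meant to expose: looser tolerances are cheaper to manufacture but push more builds outside the specification.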

Simulating Tomorrow’s Reality

To explore how Ansys’ simulation can help you achieve your MR goals by enhancing your optical designs, register for a free trial of OpticStudio or learn more about Lumerical FDTD.

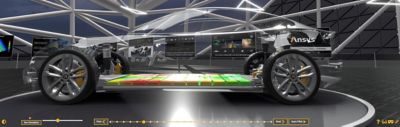

An artist's rendering of an electric vehicle energy storage system in a mixed reality environment.