ANSYS BLOG

June 30, 2022

Unleashing the Full Power of GPUs for Ansys Fluent, Part 2

Imagine the time you could save throughout the year if you were able to save a few minutes, hours, or even days on each task. Well, if that task is computational fluid dynamics (CFD) simulation and you want to reduce solve times, the Ansys Fluent GPU solver could provide the solution.

Whether you are solving a 100,000-cell or a 100 million-cell model, the traditional approach to reducing simulation time is to solve on many CPUs. Another approach that has gained attention in recent years is the use of graphics processing units, or GPUs. This started with offloading: passing some parts of the CPU solution to GPUs to accelerate the overall solve.

We implemented this offloading technology in Ansys Fluent back in 2014, but this year we are taking the use of GPU technology to a whole new level with the introduction of a native multi-GPU solver in Fluent. A native implementation provides all the solver features on the GPU and avoids the overhead of exchanging data between CPU and GPU, which results in better speedup when compared to offloading.

Unleashing the full potential of GPUs for CFD requires that the entire code runs resident on the GPU(s).

In part 1 of this blog series, we highlighted a 32X speedup for a large automotive external aerodynamics simulation, but we understand not all users are simulating models that size. In this blog, we will highlight the power of GPUs for smaller models that contain additional physics capabilities, including porous media and conjugate heat transfer (CHT).

Speeding Up CFD Simulations of All Sizes

Ranging from 512,000 cells to over 7 million, the models detailed in this blog all show substantial performance gains when solved on GPUs. And you don’t need the most expensive server-level GPU to see them, because the Fluent GPU solver can use your laptop or workstation GPU to greatly reduce solution times. Don’t just take our word for it, though. Keep reading to see how the native multi-GPU solver resulted in speedups of:

- 8.32X for an air intake system

- 8.6X for a traction inverter

- 15.47X and 11X for two different heat exchanger designs

Air Flow Through a Porous Filter

Air intake systems on automobiles draw air in and pass it through a filter to remove debris before sending clean air to the engine. For this 7.1 million-cell simulation, the filter is modeled as a porous medium with a viscous resistance of 1e+8 m⁻² and an inertial resistance of 2,500 m⁻¹. Air flows into the intake system at a mass flow rate of 0.08 kg/s.
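To get a feel for what these two resistance values mean, here is a minimal sketch of the pressure gradient across a porous zone, assuming the commonly used Darcy-Forchheimer form Δp/L = μ·(1/α)·v + C₂·½·ρ·v², where 1/α is the viscous resistance and C₂ the inertial resistance. The air density, air viscosity, and the 1 m/s superficial velocity are illustrative assumptions, not values taken from the model described here.

```python
# Sketch: pressure gradient through a porous zone (viscous + inertial terms).
# ASSUMPTIONS (not from the article): air density, air viscosity, and the
# superficial velocity through the filter.

VISCOUS_RESISTANCE = 1e8      # 1/alpha in m^-2 (from the article)
INERTIAL_RESISTANCE = 2500.0  # C2 in m^-1 (from the article)

AIR_DENSITY = 1.225           # kg/m^3, assumed
AIR_VISCOSITY = 1.7894e-5     # Pa*s, assumed


def pressure_gradient(velocity, rho=AIR_DENSITY, mu=AIR_VISCOSITY):
    """Return (viscous, inertial, total) pressure gradient in Pa/m."""
    viscous = mu * VISCOUS_RESISTANCE * velocity
    inertial = INERTIAL_RESISTANCE * 0.5 * rho * velocity ** 2
    return viscous, inertial, viscous + inertial


if __name__ == "__main__":
    v = 1.0  # m/s, hypothetical superficial velocity
    viscous, inertial, total = pressure_gradient(v)
    print(f"viscous term:  {viscous:.1f} Pa/m")
    print(f"inertial term: {inertial:.1f} Pa/m")
    print(f"total:         {total:.1f} Pa/m")
```

Under these assumed conditions the viscous and inertial terms are of similar size, which is why both resistances are specified for the filter.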

Streamlines through an air intake system that was sped up by 8.32X when solved on one NVIDIA A100 GPU.

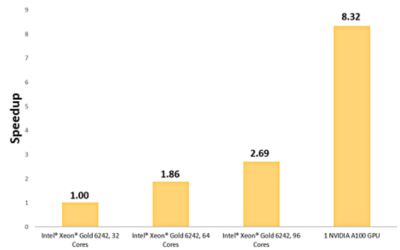

Four different hardware configurations were used to solve this model, three consisting of Intel® Xeon® Gold 6242 cores and one consisting of one NVIDIA A100 Tensor Core GPU.

Using a single NVIDIA A100 GPU resulted in a speedup of 8.3X when compared to solving on 32 Intel® Xeon® Gold cores.

Using a single NVIDIA A100 GPU to simulate airflow through a porous medium resulted in a speedup of 8.3X compared to 32 Intel® Xeon® Gold cores.

Thermal Management Using Conjugate Heat Transfer (CHT) Modeling

It is critical to account for thermal effects along with fluid flow in many industrial applications. To accurately capture the thermal behavior of the system, heat transfer in the fluid coupled with heat conduction in the adjacent metal is often important. Our native GPU solver shows massive speedups for these coupled CHT problems.

Three different thermal simulations that include CHT are shown below: a 4 million-cell water-cooled traction inverter, a 1.4 million-cell louvered-fin heat exchanger, and a 512,000-cell vertically mounted heat sink.

Water-Cooled Traction Inverter

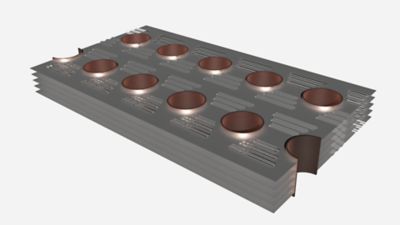

A traction inverter simulation involving CHT that was sped up by 8.6X when solved on one NVIDIA A100 GPU.

Traction inverters take direct current (DC) from a high-voltage battery and convert it to alternating current (AC) to send to an electric motor. Thermal management is critical for traction inverters to ensure both safety and longevity.

The model shown above is a 4 million-cell water-cooled traction inverter that has four insulated gate bipolar transistors (IGBTs) with a heat load of 400 W. Cooling comes from 25 °C water circulating through the housing at a rate of 0.5 kg/s, and heat rejection to the surrounding air is modeled using a convective boundary condition.

Solving on one NVIDIA A100 GPU resulted in an 8.6X speedup when compared to 32 Intel® Xeon® Gold 6242 cores.

Louvered-fin Heat Exchanger

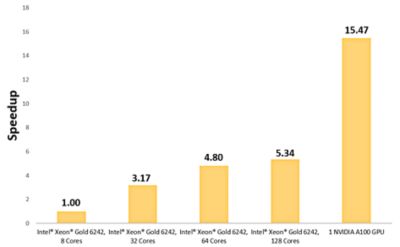

This heat exchanger model uses forced convection through a louvered-fin heat exchanger. The problem consists of air at 20 °C flowing through aluminum louvered fins at a velocity of 4 m/s to cool copper tubing.

To establish a baseline, we ran the 1.4 million-cell model on 8 Intel® Xeon® Gold 6242 cores. Running the same model on one NVIDIA A100 GPU resulted in a speedup of 15.5X.

Temperature contour on a louvered-fin heat exchanger solved 15.47X faster on one NVIDIA A100.

A single GPU solved a louvered-fin heat exchanger simulation 15.47X faster.

Vertically Mounted Heat Sink

The final problem consists of a free-convection, five-finned aluminum heat sink with the base maintained at a constant temperature of 76.85 °C and the surrounding air at an ambient temperature of 16.85 °C.
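These boundary temperatures look arbitrary in Celsius but correspond to round values in kelvin. A quick conversion, as a sketch:

```python
# Convert the heat sink boundary temperatures from Celsius to kelvin.

def celsius_to_kelvin(t_celsius):
    """Convert a temperature from degrees Celsius to kelvin."""
    return t_celsius + 273.15


base_k = celsius_to_kelvin(76.85)     # heat sink base (from the article)
ambient_k = celsius_to_kelvin(16.85)  # surrounding air (from the article)
delta_k = base_k - ambient_k          # temperature difference driving free convection

print(f"base: {base_k:.2f} K, ambient: {ambient_k:.2f} K, difference: {delta_k:.2f} K")
# prints: base: 350.00 K, ambient: 290.00 K, difference: 60.00 K
```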

Solving this 512,000-cell case on a laptop with one NVIDIA Quadro RTX 5000 GPU resulted in a speedup of 11X when compared to six Intel® Core™ i7-11850H cores on the same laptop.

Even when using a single laptop GPU like the NVIDIA Quadro RTX 5000, you can expect huge reductions in solve time with the native multi-GPU solver in Fluent. When using a similar graphics card in a workstation, even greater performance can be expected.

A 512,000-cell heat sink simulation was sped up 11X when solved on one NVIDIA Quadro RTX 5000 GPU.
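The four cases above use different CPU baselines (32, 32, 8, and 6 cores), so the speedup figures are not directly comparable with one another. The sketch below collects the reported numbers and derives two quantities from them: the fraction of solve time saved, 1 − 1/S, and a rough "equivalent core count", S × baseline cores. The second quantity assumes ideal linear CPU scaling, which real solvers rarely achieve, so treat it as a rough estimate rather than a measurement.

```python
# Speedups and CPU baselines as reported in the article.
CASES = [
    # (name, cells, speedup, baseline_cpu_cores, gpu)
    ("Air intake system", 7_100_000, 8.32, 32, "NVIDIA A100"),
    ("Traction inverter", 4_000_000, 8.6, 32, "NVIDIA A100"),
    ("Louvered-fin heat exchanger", 1_400_000, 15.47, 8, "NVIDIA A100"),
    ("Vertically mounted heat sink", 512_000, 11.0, 6, "NVIDIA Quadro RTX 5000"),
]


def time_saved_fraction(speedup):
    """Fraction of the baseline solve time eliminated by a given speedup."""
    return 1.0 - 1.0 / speedup


def equivalent_cores(speedup, baseline_cores):
    """CPU cores needed to match the GPU.

    ASSUMPTION: ideal linear scaling with core count (optimistic).
    """
    return speedup * baseline_cores


if __name__ == "__main__":
    for name, cells, speedup, cores, gpu in CASES:
        saved = time_saved_fraction(speedup)
        equiv = equivalent_cores(speedup, cores)
        print(f"{name} ({cells:,} cells, {gpu}): "
              f"{saved:.0%} less solve time, ~{equiv:.0f} core-equivalents")
```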

Revolutionizing CFD Simulations Through GPUs

Fluent users now have the power and flexibility to run on a laptop or workstation with a single GPU or scale up to a multi-GPU server. Leverage the hardware you already own to speed up your CFD simulations more than you ever thought possible.

The native multi-GPU solver in Fluent runs on any NVIDIA card from 2016 or later with CUDA driver 11.0 or newer installed.
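One way to check a machine against this requirement is to read the CUDA version that the installed driver reports in the header of `nvidia-smi` output. The sketch below parses that field and compares it to 11.0. Treating the "CUDA Version" field as the "CUDA driver" version mentioned above is an assumption on our part, and the script does not check the age of the card.

```python
import re
import shutil
import subprocess

MIN_CUDA = (11, 0)  # minimum stated in the article


def parse_cuda_version(smi_output):
    """Extract (major, minor) from the 'CUDA Version: X.Y' header field."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def meets_minimum(smi_output, minimum=MIN_CUDA):
    """True if the reported CUDA version is at least `minimum`."""
    version = parse_cuda_version(smi_output)
    return version is not None and version >= minimum


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; cannot check the driver.")
    else:
        result = subprocess.run(
            ["nvidia-smi"], capture_output=True, text=True, check=False
        )
        print("CUDA version reported by driver:", parse_cuda_version(result.stdout))
        print("Meets 11.0 minimum:", meets_minimum(result.stdout))
```

The parsing is kept separate from the `nvidia-smi` call so it can be tried on saved output from another machine.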

Ansys has been a trailblazer in the use of GPU technology for simulation, and with this new solver technology, we are taking it to a whole new level. All solver features in the native GPU solver are built with the same discretization and numerical methods as the Fluent CPU solver, providing users with the accurate results they expect in less time than ever before.