Electric and autonomous vehicles rely on simulation to speed up development and increase safety.

In the race to put more electric vehicles (EVs) and autonomous vehicles (AVs) on the road, reducing time to market can be a huge competitive advantage, meaning automotive engineers don’t have a minute to spare.

While depending on simulation to fast-track the development and optimization cycle and improve product safety is nothing new, yesterday’s single-core computing environment has struggled to keep up with the massive, hardware-intensive computation models required for complicated EV and AV designs.

When it comes to modeling the interaction of vehicle components across millions of scenarios or simulating an advanced driver-assistance system (ADAS), traditional processing methods can be too time-consuming. Because any constraints, whether software- or hardware-related, can distract engineers from the task at hand, Ansys is constantly exploring how to deliver the best cost-performance ratio for different solvers. Partnering with some of the biggest names in automotive software and operating system development, artificial intelligence (AI) computing, and cloud solutions, Ansys has demonstrated how to deliver high-confidence simulations, sometimes as much as 53X faster than before.

Pushing the Boundaries

While both EVs and internal combustion engine (ICE) vehicles rely heavily on software, EVs often push the boundaries of software-defined features.

Consider mission-critical systems like electronic control units (ECUs). Because these devices manage engine operations, safety measures, braking systems, keyless entry, and driver comfort, they must respond to events in real time. That requirement makes task distribution and synchronization important considerations when embedded software engineers develop code.

In addition, ECUs must comply with AUTOSAR, or AUTomotive Open System ARchitecture. AUTOSAR is the global development partnership of automakers and software companies whose purpose is to establish a standardized software architecture for automotive ECUs.

Not only do these computation-heavy challenges require more sophisticated software engineering, but solving them is inherently a slow process. A collaboration between Ansys and AUTOSAR specialist Elektrobit has delivered a new way to streamline ECU development. And it’s so effective that in a real-world test case, it cut processing time by roughly 60%.

By combining the Ansys SCADE embedded software product collection, which automatically generates code-compliant software, with the Elektrobit tresos Safety operating system (ensuring an AUTOSAR-compliant standard software stack), the companies created an automated process for generating and verifying embedded software cores using multicore environments. This method optimizes the synchronization and sequencing of tasks, produces verified software code, and meets stringent AUTOSAR standards with the lowest possible investment of time, money, and computing resources.
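To see why the sequencing and synchronization of tasks matter on multiple cores, consider the toy scheduler below. It is only a sketch under stated assumptions: it does not represent how SCADE or the tresos operating system work, and the task names, durations, and dependencies are hypothetical.

```python
from typing import Dict, List


def makespan(durations: Dict[str, float],
             deps: Dict[str, List[str]],
             cores: int) -> float:
    """Greedy list scheduling over tasks listed in dependency order.

    A task starts once all of its predecessors have finished
    (synchronization) and the earliest-idle core is free (distribution).
    """
    finish: Dict[str, float] = {}
    core_free = [0.0] * cores  # time at which each core becomes idle
    for task, duration in durations.items():
        ready = max((finish[d] for d in deps.get(task, [])), default=0.0)
        core = min(range(cores), key=lambda c: core_free[c])
        start = max(ready, core_free[core])
        finish[task] = start + duration
        core_free[core] = finish[task]
    return max(finish.values())


# Hypothetical task set; names and durations (ms) are illustrative only.
durations = {"read_voltage": 1.0, "read_temperature": 1.0,
             "estimate_soc": 1.5, "check_limits": 0.5}
deps = {"estimate_soc": ["read_voltage", "read_temperature"],
        "check_limits": ["estimate_soc"]}

single_core = makespan(durations, deps, cores=1)
dual_core = makespan(durations, deps, cores=2)
print(f"1 core: {single_core} ms, 2 cores: {dual_core} ms")
```

In this made-up example the two sensor reads run in parallel on two cores, but the dependent tasks still have to wait for them, so the gain is limited by the longest chain of dependencies.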

As proof, the Ansys-Elektrobit team carried out a test on a battery management system (BMS). BMSs are crucial for ensuring the safe operation of EVs. They monitor and control parameters, such as cell voltage, temperature, and state of charge (SOC), to prevent thermal runaway, overcharging, and other potential hazards.

They are also notoriously challenging to simulate due to the complex interplay of physical, chemical, and electrical processes.

In the BMS test case, computation on a single processing core resulted in a runtime of 4.64 milliseconds. In a multicore environment, however, the same computation took just 1.9 milliseconds, a reduction in processing time of roughly 60% (a speedup of about 2.4X). That is a significant savings for overburdened software engineering teams and a key to faster market launches.
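The arithmetic behind that figure can be checked in a few lines. The two runtimes come from the test case above; the variable names are just illustrative.

```python
single_core_ms = 4.64  # runtime on one core, from the BMS test case
multicore_ms = 1.9     # runtime in the multicore environment

# Fraction of the original runtime that was eliminated.
reduction = (single_core_ms - multicore_ms) / single_core_ms
# How many times faster the multicore run is.
speedup = single_core_ms / multicore_ms

print(f"Runtime reduction: {reduction:.1%}")
print(f"Speedup: {speedup:.2f}X")
```

The reduction works out to about 59%, which the article rounds to 60%.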

Using HPC Clusters

While the right software and multicore environment can accelerate innovation for embedded software engineers, for many other engineers the answer to faster simulation lies with high-performance computing (HPC) clusters: groups of interconnected computers working together to solve a single problem.

The HPC environment can handle massive amounts of data and perform complex calculations at high speeds, chiefly by distributing tasks across the whole processing array. But even with those advantages, runtimes for automotive use cases can still be significant. What’s more, HPC clusters are often expensive and quickly outdated. In response, two alternatives have emerged: graphics processing unit (GPU) computing, and application-specific solvers running in the cloud, where hardware is continually refreshed.

A frontal offset crashworthiness evaluation is a common use of Ansys LS-DYNA nonlinear dynamics structural simulation software.

Using GPUs

GPUs were originally designed for rendering graphics, but their highly parallel architecture and ability to perform thousands of independent calculations simultaneously make them suitable for a wide range of applications. That includes increasing throughput for computational fluid dynamics (CFD) simulations.

Ansys Fluent fluid simulation software is widely used in automotive design to model and analyze fluid phenomena. Fluent CFD is known for its efficient HPC capabilities, scaling to thousands of central processing unit (CPU) cores. However, to determine the optimal computing environment to speed up the solver’s simulations, Ansys recently participated in a benchmark study with NVIDIA and Supermicro on external automotive aerodynamics. What the companies found demonstrates that GPU-based computing can provide substantial performance gains for complex simulations like CFD.

The simulation model involved a very detailed representation of the car’s external shape, with a mesh of 250 million individual cells. This level of detail was necessary to accurately capture the complex flow patterns around the car, which can significantly impact its aerodynamic performance.

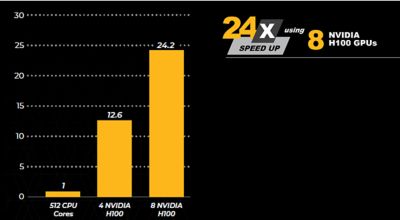

When our HPC partner, MVConcept, ran the large-eddy simulation (a CFD technique used to simulate turbulent flows) using four NVIDIA H100 GPUs, there was a 12.6X improvement in speed compared with 512 CPU cores. Doubling the number of GPUs to eight nearly halved solve time, raising the speedup to 24.2X. This near-linear scale-up suggests that adding more GPUs could continue to yield significant solve time improvements.

Near-linear scaling means that Ansys Fluent’s 12.6X speedup on four NVIDIA H100 GPUs nearly doubles (to 24.2X) when using eight NVIDIA H100 GPUs.
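How close the result is to ideal scaling can be worked out from the two reported speedups. The sketch below uses only those figures; "scaling efficiency" here simply means the measured gain divided by the ideal gain from doubling the GPU count.

```python
speedup_4_gpus = 12.6  # versus 512 CPU cores, from the benchmark
speedup_8_gpus = 24.2  # versus the same CPU baseline

# Measured gain from doubling the GPU count.
gain = speedup_8_gpus / speedup_4_gpus
# Perfectly linear scaling would give a gain of 8 / 4 = 2.
ideal_gain = 8 / 4
efficiency = gain / ideal_gain

print(f"Gain from 4 to 8 GPUs: {gain:.2f}X")
print(f"Scaling efficiency: {efficiency:.0%}")
```

A gain of about 1.92X against an ideal 2X corresponds to roughly 96% scaling efficiency.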

Accelerating Solve Time

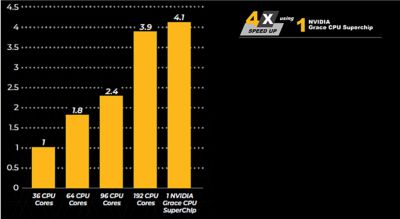

Ansys, NVIDIA, and Supermicro also collaborated on a benchmarking study to assess Ansys LS-DYNA nonlinear dynamics structural simulation software in a Supermicro ARS-121LDNR computing environment equipped with the NVIDIA Grace CPU Superchip.

LS-DYNA software is an explicit simulation application, meaning that it is used mainly to analyze sudden impacts, such as crashes, drops, explosions, and other severe loading events. It generates some of the biggest simulations done today, evaluating hundreds of virtual test scenarios with models composed of millions of elements or nodes. The NVIDIA Grace CPU Superchip is a new type of processor designed for data center-scale computing, offering significant performance improvements over traditional CPUs.

The study involved recreating a virtual frontal car crash to assess safety compliance when a vehicle hits an offset deformable barrier. Leveraging this powerful software and hardware combination resulted in a 4X speedup.

With the NVIDIA Grace CPU Superchip, engineers using LS-DYNA software can drastically reduce solve time to evaluate more collision scenarios for improved safety and reduce the time and expenses of physical testing.

Predict at the Speed of AI

Simulation can also be used to increase the speed of AI training. The faster that AI is trained, the faster and more accurate those simulations become. Ansys AI offerings include the Ansys SimAI platform and Ansys AI+ add-on modules for existing simulation products.

Combining AI and simulation makes predicting aerodynamic performance across design changes 10 to 100X faster, even when the geometry structure is inconsistent, by leveraging CFD simulations from earlier design phases or previous car generations.

When simulation software, AI, and HPC are combined, the results are even more impressive. In the benchmarks performed in conjunction with NVIDIA and Supermicro, Ansys optiSLang AI+ optimization software showed an amazing speed boost. Once optiSLang software is trained with simulation data derived from small parametric design studies, which may take days to create, multiple AI models using different variables can be run in minutes or seconds. The baseline was the time needed to generate 80 design points for two 5G mmWave antenna modules simulated with HFSS software on 12 CPU cores and 8 GPUs. When the time to generate the same number of design points with an optiSLang AI+ model was calculated, the benchmark testing showed an astounding 1,600X speed increase.

Meeting the Demands of Complexity

Improving performance is essential, and so is outpacing the competition. But when it comes to in-depth automotive product studies, fixed computing infrastructures can lack sufficient capacity for large simulations, or they can take days, weeks, or even months to achieve high-fidelity results. Upgrades are available, but budget limits may put them out of reach for many engineering departments.

To optimize runtimes and costs, Ansys has partnered with cloud service provider Amazon Web Services (AWS) to deliver the Ansys Gateway powered by AWS cloud engineering solution, a scalable platform purpose-built for the demands of complex simulations, with plug-and-play simplicity.

With the Ansys Gateway powered by AWS solution, product development teams can easily and efficiently solve large, complex problems in the cloud. Subscribers have flexible, affordable access to the most advanced, state-of-the-art software (including some preconfigured for easy deployment) and hardware, choosing from an array of available processor types, processing cores, and node configurations. The Ansys Gateway powered by AWS solution allows Ansys simulation software to run on thousands of computer processors simultaneously. This significantly speeds up simulations without requiring expensive hardware or increasing costs.

To confirm the effectiveness of the Ansys Gateway powered by AWS solution, Ansys completed two studies using LS-DYNA software. The model, a three-car, front-impact simulation, included more than 20 million elements. What Ansys discovered was that when it comes to using the cloud, configuration matters. AWS instance type Amazon EC2 hpc6a.48xlarge delivered faster results at a lower cost compared with the instance types Amazon EC2 c6i.32xlarge and Amazon EC2 c5n.18xlarge. With an optimized cloud configuration, Ansys found that users can achieve up to an 11X acceleration in simulation runtime for less money.
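One way to reason about "faster at a lower cost" is to compare the total cost of a job rather than the hourly rate. The sketch below is illustrative only: the configuration names, prices, node counts, and runtimes are hypothetical placeholders, not AWS pricing or results from the Ansys studies.

```python
from dataclasses import dataclass


@dataclass
class CloudConfig:
    name: str
    price_per_node_hour: float  # hypothetical price, USD
    nodes: int
    runtime_hours: float        # hypothetical solve time

    @property
    def job_cost(self) -> float:
        # Total cost of one simulation run on this configuration.
        return self.price_per_node_hour * self.nodes * self.runtime_hours


# Placeholder values chosen only to illustrate the comparison.
configs = [
    CloudConfig("config_a", price_per_node_hour=6.0, nodes=8, runtime_hours=2.0),
    CloudConfig("config_b", price_per_node_hour=4.0, nodes=8, runtime_hours=5.0),
    CloudConfig("config_c", price_per_node_hour=3.0, nodes=8, runtime_hours=8.0),
]

fastest = min(configs, key=lambda c: c.runtime_hours)
cheapest = min(configs, key=lambda c: c.job_cost)

for c in configs:
    print(f"{c.name}: {c.runtime_hours} h, ${c.job_cost:.2f} per job")
```

With these placeholder numbers, the configuration with the highest hourly price is also the cheapest per job, because it finishes so much sooner. That is the kind of trade-off that makes instance selection worth benchmarking.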

Because Ansys software is tightly integrated with the AWS cloud and runs side by side with other simulation workflow tools in the cloud, engineers can avoid switching back and forth between platforms to complete tasks. So the Ansys Gateway powered by AWS solution not only shortens solution times, it also streamlines job submissions, handoffs, and other process steps.

Accelerating Innovation With GPU Supercomputing

Ansys recently announced another exciting milestone in CFD simulation that was achieved in collaboration with NVIDIA and Texas Advanced Computing Center (TACC). This work involved using the 320 NVIDIA GH200 Grace Hopper Superchip nodes on the Vista supercomputer at TACC to run a highly complex automotive external aerodynamics simulation.

This simulation tackled a 2.4 billion-cell model, achieving extraordinary results, including a 110X speedup over traditional CPU-based approaches and performance equivalent to more than 225,000 CPU cores. This breakthrough cuts simulation time from nearly a month to just over six hours, redefining the potential for overnight high-fidelity CFD analyses and helping set a new standard in the industry.

For those in engineering and product development, this GPU-driven leap forward offers more than just faster results: it opens the door to simulations of unprecedented scale and complexity that can deliver insights previously out of reach.

Efficient, Powerful, and Fast

Engineers recognize that simulation is more efficient than building prototypes. But sophisticated vehicles like EVs and AVs require more computing resources than their predecessors. By drastically reducing solve time, Ansys is giving automotive engineers the ability to evaluate more scenarios for safe driving while shaving the time and expenses of physical testing.

Learn More

Download the e-book “How to Accelerate Ansys Multiphysics Simulation Software With Turnkey Hardware Systems.”