TOPIC DETAILS

- What is an Autonomous Vehicle?

- Advantages and Disadvantages of Autonomous Vehicles

- 5 Levels of Automation in Autonomous Vehicles

- Autonomous Vehicle Sensors

- How are Autonomous Vehicles Designed and Tested?

- Industry-specific Regulations on Autonomous Vehicles

- How Simulation Software Drives Autonomous Vehicle Design

What is an Autonomous Vehicle?

An autonomous vehicle collects, perceives, and analyzes data to make independent decisions and perform actions based on its surroundings. Automation in vehicles is rapidly increasing, and we now have driverless vehicles on the road, as well as vehicles that have some level of autonomy with a human driver.

The automotive sector defines five levels of automation (aerospace defines three) above a baseline of no automation (Level 0), up to full automation (Level 5). In farming and mining, autonomous vehicles perform tasks without human intervention. In aerospace, 98% of a flight is automated because of autopilot features, but it will be a long time before we see fully autonomous aircraft because of strict regulations.

Level 5 cars don’t exist yet because the artificial intelligence (AI) in autonomous cars cannot currently compete with human drivers, even though it does remove the potential for human error. However, Waymo is pushing closer to that limit with its driverless Level 4 autonomous vehicles. Automakers such as Ford and Tesla also offer autonomous features that are considered Level 2 and Level 3, meaning partial automation and conditional automation, respectively. In the next 10 years, we may see fully autonomous Level 5 self-driving cars on the road.

Designing an autonomous vehicle is more complex than designing a conventional car (i.e., an internal combustion engine or electric vehicle) because the vehicle must have its own “brain” and perform the usual driving tasks while providing all required safety features. The absence of a human driver also creates a legal gray area: if there’s an accident, there is no driver to hold accountable. This makes the design and validation of safety systems more complex, as manufacturers need to ensure that they avoid situations that could lead to legal challenges.

Advantages and Disadvantages of Autonomous Vehicles

Vehicle automation brings these benefits to society:

- Peace of mind when commuting in heavy traffic and the ability to perform other tasks during a long drive

- Fewer crashes, thanks to better predictions from self-driving cars

- Reduced traffic jams from traveling at optimal speeds

- Higher speeds and greater safety on highways due to reduced human error, distraction, and fatigue

- Increased access to transportation for people with disabilities

- A reduction in carbon emissions from lower levels of traffic, with an even greater impact if used in conjunction with electric vehicles (EVs)

- Reduced costs, as larger-scale manufacturing helps produce cheaper vehicles and creates new economic markets

However, there are also some potential disadvantages:

- Large data requirements for very technologically complex systems, as one small error in the software could lead to accidents

- Expensive production costs because of rigorous testing requirements

- Opportunities for hackers to interfere with cloud-based software

- The need for robust communication networks to handle large amounts of data in real time

5 Levels of Automation in Autonomous Vehicles

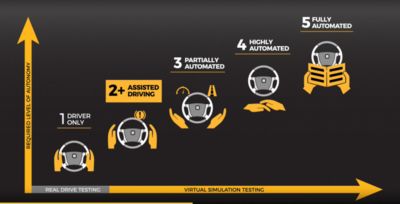

Visual categorization of the levels of automation in vehicles

As previously mentioned, there are five levels of autonomy for cars and three levels for aerospace. For cars, Level 0 means there is no autonomous technology, while Level 5 is a self-driving vehicle with full autonomy. Most automated vehicles on the road today are at Level 2 or Level 3.

Levels 0-2

Levels 0-2 range from having no automation features to using assisted driving functionalities. In any of these levels, the driver is still fully in control of the vehicle and must be actively engaged at all times. The automation tools in these levels assist the driver with the driving tasks without taking over control.

Levels 3-5

From Level 3 onward, the human driver no longer has full responsibility for the vehicle, and the automated driving system monitors the driving environment.

In Level 3, the driver doesn’t control the car unless there’s an emergency, and Levels 4 and 5 are completely driverless. The main difference between Level 4 and Level 5 is that Level 4 vehicles are geofenced and have to work within certain operating conditions, whereas Level 5 vehicles have complete autonomy, can drive anywhere, and are not governed by predetermined conditions.
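The split between Levels 0-2 and Levels 3-5 can be captured in a few lines of code. The following is a minimal sketch, not part of any standard or product API: the names for Levels 1, 4, and 5 follow common SAE J3016 terminology rather than this article, and the helper functions are hypothetical.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Driving automation levels 0-5 (level names are an assumption)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_stay_engaged(level: AutomationLevel) -> bool:
    """Levels 0-2: the driver is in control and actively engaged at all times."""
    return level <= AutomationLevel.PARTIAL_AUTOMATION


def system_monitors_environment(level: AutomationLevel) -> bool:
    """Level 3 onward: the automated driving system monitors the environment."""
    return level >= AutomationLevel.CONDITIONAL_AUTOMATION


def limited_to_operating_conditions(level: AutomationLevel) -> bool:
    """Level 4 is driverless only within a geofence and set conditions."""
    return level == AutomationLevel.HIGH_AUTOMATION


for level in AutomationLevel:
    print(level.value, level.name,
          driver_must_stay_engaged(level),
          system_monitors_environment(level))
```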

Examples of Different Autonomous Levels in Society Today

- Uber has cars with Level 2 advanced driver-assistance systems (ADAS) and Level 3 automated driving in California.

- Uber is looking to move up to Level 4 vehicles in the near future by utilizing AI, and it will soon be adopting a robotaxi service from China in countries outside the U.S.

- Self-driving cars are already available in San Francisco, Los Angeles, Phoenix, and Austin through Waymo, but the cars are geofenced to those locations.
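To make the idea of geofencing concrete, here is a rough sketch of how a vehicle could check whether a position falls inside an approved service area. It uses a standard ray-casting point-in-polygon test. The rectangular `SERVICE_AREA` and its coordinates are made up for illustration and do not represent any real operator's boundaries or method.

```python
def inside_geofence(point, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical service area in arbitrary planar units.
SERVICE_AREA = [(0.0, 0.0), (10.0, 0.0), (10.0, 6.0), (0.0, 6.0)]

print(inside_geofence((4.0, 3.0), SERVICE_AREA))   # True
print(inside_geofence((12.0, 3.0), SERVICE_AREA))  # False
```

A production system would work with geographic coordinates and map data rather than a flat polygon, but the principle is the same: outside the boundary, a Level 4 vehicle is not permitted to drive itself.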

Challenges in Moving Toward Level 5 Vehicles

The technology is already available to create Level 5 automated vehicles if the car is on a road with no obstacles. However, the presence of obstacles, construction areas, and people behaving in unpredictable ways makes it difficult to design fully automated vehicles, as do the many types of roads to navigate (e.g., dirt roads that might not look like a traditional road and could confuse the algorithms of the vehicle).

Autonomous Vehicle Sensors

Sensors are the most crucial component of autonomous vehicles and form the basis of any driver-assistance technology. They collect the data that an autonomous vehicle’s “brain,” which uses data fusion algorithms, processes to make an informed decision. With larger amounts of varied data, autonomous vehicles can make better decisions, which is why many types of sensors are used on them.

If we compare an autonomous vehicle to a human, the sensors represent the ears and eyes that spot potential hazards. The brain (representing AI) then interprets the surroundings based on what’s observed. While sensors today are still not as accurate as human senses, many of them can be combined to build a complete picture of the vehicle’s environment.

Here are the key sensors on an autonomous vehicle:

- Camera: Acts as the eyes of the vehicle and sees the world around it

- Lidar: Measures distances, including the distance between the vehicle and an obstacle

- Radar: Measures short-range distances and determines velocity

- Thermal sensor: Used when cameras aren’t suitable (e.g., in tunnels and dark conditions) to detect the heat emitted by people and objects that the camera cannot see

- Ultrasonic sensor: Placed in the wheels to detect curbs and other cars when parking

Location of sensors on an autonomous vehicle
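As a simplified illustration of how two of these sensors turn raw measurements into distance and velocity, the sketch below applies the textbook time-of-flight and Doppler relationships. It assumes a pulsed time-of-flight lidar and a radar with a 77 GHz carrier (a common automotive band). The function names are hypothetical, and real sensors involve far more signal processing.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def lidar_range_m(round_trip_time_s):
    """Distance from a time-of-flight pulse: the light travels out and back."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def radar_radial_velocity_mps(doppler_shift_hz, carrier_hz):
    """Radial velocity from the Doppler shift of the reflected radar signal."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


# A pulse that returns after 200 nanoseconds corresponds to about 30 m.
print(round(lidar_range_m(200e-9), 2))

# A 5 kHz Doppler shift on an assumed 77 GHz carrier is roughly 9.7 m/s.
print(round(radar_radial_velocity_mps(5_000.0, 77e9), 2))
```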

Sensor fusion algorithms are crucial for ensuring that an autonomous vehicle can navigate effectively. Sensor fusion takes data from each sensor — ranging from the velocity of an object to how far away it is — and pieces everything together to assess the situation.

Sensor fusion also prioritizes different sensors based on the environment. For example, if it’s dark, data from the thermal cameras will take precedence over camera data for decisions.
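One simple way to picture this prioritization is an inverse-variance weighted average, in which each sensor's estimate counts more the more it is trusted. The sketch below is illustrative only and is not the algorithm used by any particular vehicle or software product. The sensor names, variances, and the `DARK_CAMERA_PENALTY` factor are all assumptions.

```python
# Assumed factor by which camera uncertainty grows in the dark.
DARK_CAMERA_PENALTY = 100.0


def fuse_estimates(readings):
    """Inverse-variance weighted mean of (value, variance) pairs."""
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    return value, 1.0 / total  # fused value and fused variance


def fuse_distance(readings_by_sensor, is_dark):
    """Fuse per-sensor distance estimates, trusting the camera less when dark."""
    adjusted = []
    for sensor, (value, variance) in readings_by_sensor.items():
        if is_dark and sensor == "camera":
            variance *= DARK_CAMERA_PENALTY
        adjusted.append((value, variance))
    return fuse_estimates(adjusted)


# Hypothetical distance estimates in metres: (value, variance).
readings = {"camera": (20.0, 1.0), "thermal": (22.0, 1.0)}

print(fuse_distance(readings, is_dark=False)[0])  # midway between the two
print(fuse_distance(readings, is_dark=True)[0])   # close to the thermal value
```

In daylight the two sensors are weighted equally, while in the dark the fused estimate moves almost entirely to the thermal reading, which mirrors the precedence described above.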

How are Autonomous Vehicles Designed and Tested?

There are multiple design stages for autonomous vehicles, including component design, system design, and validation. Simulation software is used in all design phases to streamline workflows.

Component design involves optimizing lenses, mechanical barrels, multiple sensors, and the position of the sensors on the vehicle. While a component may perform perfectly on its own, it may not fit in the desired location because of the vehicle’s geometry, or perturbations may interfere with the sensor’s operation.

Simulation is used to put the components into different scenarios and weather conditions to ensure that they are effective in the vehicle’s operational ecosystem. The whole design process hinges on validating every stage as soon as possible to reduce time and cost.
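A scenario sweep of this kind can be sketched as a grid of test conditions. The example below only enumerates combinations; it does not call any simulation tool. The condition lists and the `build_scenarios` helper are hypothetical and stand in for whatever a real test plan would define.

```python
from itertools import product

# Hypothetical test dimensions for a camera component.
WEATHER = ["clear", "rain", "fog", "snow"]
LIGHTING = ["day", "dusk", "night"]
MOUNT_POSITIONS = ["windshield", "front_bumper", "roof"]


def build_scenarios():
    """Return every combination of weather, lighting, and mounting position."""
    return [
        {"weather": weather, "lighting": lighting, "mount": mount}
        for weather, lighting, mount in product(WEATHER, LIGHTING, MOUNT_POSITIONS)
    ]


scenarios = build_scenarios()
print(len(scenarios))  # 4 * 3 * 3 = 36 combinations
print(scenarios[0])
```

Even three short lists produce 36 cases, which hints at why exhaustive physical testing quickly becomes impractical and why scenario selection matters.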

Industry-specific Regulations on Autonomous Vehicles

Safety and regulations play a key part in designing components and sensing systems, as they drive the definition of the system’s functional and safety requirements. Differences in regional and industry-related regulations govern the levels of automation that can be adopted. For example, full automation already exists in the mining and farming industries, whereas only partial automation features are currently available in the automotive and aerospace sectors.

Let’s look at two of the most regulated industries as examples of the constraints facing the industry when designing vehicles with higher autonomy:

- Aerospace: Nothing in aerospace is released without strict validation, and any sensors need to operate over extreme temperature ranges, at high speeds, and in high-vibration environments. While 98% of a flight today is automated by autopilot, approval of a plane with no pilots will be difficult because of potential safety issues. Ansys is part of the ARP6983 consortium that seeks to form new regulations that validate autonomy functions for aerospace.

- Automotive: Regulation varies by region. Tesla has cars in the U.S. with full self-driving (FSD) features, but they are currently pending regulatory approval in Europe. BMW has also released their second Level 3 Highway Assistant in Europe.

How Simulation Software Drives Autonomous Vehicle Design

Simulation software offers two distinct advantages:

- It reduces the need for expensive prototyping by enabling virtual testing and development, saving time and resources.

- It is essential for validation and verification (V&V) of AI-driven systems, as these systems can only be validated through probabilistic assessments of failure rates that require extensive simulated testing.

While both these benefits are crucial, they address different aspects of the development process and are not directly linked.
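To see why probabilistic validation demands so much simulated testing, consider a simplified model in which every test run is independent and either passes or fails. Under that assumption, the number of consecutive failure-free runs needed to claim, with a given confidence, that the failure rate is below a target follows from requiring (1 - p)^n <= 1 - confidence. The function below is a sketch of that calculation only; its name and the example numbers are hypothetical.

```python
import math


def failure_free_trials_needed(max_failure_rate, confidence):
    """Smallest n such that (1 - max_failure_rate) ** n <= 1 - confidence."""
    return math.ceil(
        math.log(1.0 - confidence) / math.log(1.0 - max_failure_rate)
    )


# Showing a failure rate below 1 in 1,000 with 95% confidence takes
# roughly 3,000 failure-free runs (about 3 divided by the target rate).
print(failure_free_trials_needed(1e-3, 0.95))

# Tightening the target to 1 in 1,000,000 scales the count accordingly.
print(failure_free_trials_needed(1e-6, 0.95))
```

The required number of runs grows in inverse proportion to the target failure rate, which is why very low failure rates are impractical to demonstrate through physical testing alone.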

Here are some key examples of how Ansys software is used throughout the design process:

- Ansys Systems Tool Kit software: Provides a physics-based modeling environment for analyzing platforms and payloads in a realistic mission context.

- Ansys medini analyze software: Ensures that vehicles will be safe and meet regulatory requirements.

- Ansys AVxcelerate Autonomy software: Provides a solution designed specifically to support developing, testing, and validating safe automated driving technologies.

- Ansys AVxcelerate Sensors software: Integrates the simulation of sensors like camera, radar, lidar, and thermal camera to virtually assess complex ADAS systems and autonomous vehicles.

- Ansys optiSLang software: Used for scaling different test environments and determining a logical series of test scenarios.

- Ansys Zemax OpticStudio software: Used to optimize lens stacks in the vehicle’s cameras and lidar system.

- Ansys Lumerical FDTD software: Used to design CMOS sensors with the best performance.

- Ansys Speos software: Simulates and optimizes the design and performance of optical systems, including the lens and packaging spaces.

- Ansys HFSS software: 3D electromagnetic simulation software that’s used for designing different high-frequency electronic components used in autonomous vehicles, including radio frequency sensors, lidar, radar and cameras.

Overall, simulation helps to shorten development time and time to market, and different simulation software packages can be combined throughout the process. Ansys is focused on enhancing the development of Level 2 and Level 3 vehicles and is working toward enabling a more thorough design process for Level 4 and Level 5 autonomous vehicles in the future.

Discover the ideal combination of software solutions for your autonomous vehicle from Ansys.