What is a Heads-Up Display (HUD) & How Does it Work?

As you drive, there are dozens of distractions fighting for your attention—on the road, but also within the vehicle. The speedometer. Fuel levels. Traffic alerts and driving conditions. Valuable information to improve your driving experience, but to see it, you need to look down or over—away from the road.

What is a Heads-Up Display?

A heads-up display (HUD) is a form of augmented reality that presents information directly in your line of sight so you don’t have to look away to see it. As the name suggests, it helps drivers keep their eyes on the road and their heads up.

What Are Applications for Heads-Up Displays?

While driving is the most widely known application for heads-up displays, the technology has many other uses. Anywhere an operator needs to see the real world and digital information at the same time, a HUD can help. Piloted systems, such as aircraft, military vehicles, and heavy machinery, are all ideal use cases. In these situations, information is projected where the operator can view it without looking away from the road, sky, or task at hand.

Another common application for HUDs is video games. Augmented reality headsets use HUD technology to let gamers see through the game and into their physical environment. Used in this way, they create a mixed reality in which gameplay is overlaid with information about the player’s status, such as health, wayfinding, and game statistics.

The global use of telemedicine has also increased the adoption of heads-up displays in healthcare. Head-mounted displays and smart glasses featuring HUD technology give medical professionals the convenience of hands-free operation and can be found in clinical care, education and training, care team collaboration, and even AI-guided surgery.

Types of Heads-Up Displays

Whether you’re a pilot needing to keep your eyes on air traffic or a gamer watching out for the edge of the coffee table, there are several types of heads-up displays designed to satisfy specific user requirements. Many factors, such as the environment, cost constraints, and user comfort, go into choosing the right type of HUD for the application.

But while HUD types vary by industry and use case, most are built from the same three components: a light source (such as an LED), a reflector (such as a windshield, combiner, or flat lens), and a magnifying system.

All HUDs have a light source (the Picture Generation Unit) and a surface that reflects the image. Most often this surface is transparent so the user can see through it. Between the light source and the reflecting surface, there is typically a magnifying optical system. The magnifying system can be:

- One or several freeform mirror(s) magnifying the image

- A waveguide with gratings magnifying the image

- A magnifying lens (typically in aircraft HUDs)

- Nothing (some HUDs have no magnification)
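To give a feel for how a mirror can magnify the image, here is a minimal sketch that treats the magnifier as a single ideal concave mirror and applies the paraxial mirror equation. This is a simplifying assumption: real HUD mirrors are freeform, and the function name and all numbers below are hypothetical, chosen only for illustration.

```python
from typing import Tuple


def virtual_image(focal_length_mm: float, object_distance_mm: float) -> Tuple[float, float]:
    """Return (image_distance_mm, magnification) for an ideal concave mirror.

    Uses the paraxial mirror equation 1/f = 1/d_o + 1/d_i with the
    real-is-positive sign convention, so a negative image distance
    means a virtual image located behind the mirror.
    """
    if object_distance_mm == focal_length_mm:
        raise ValueError("object at the focal point: the image forms at infinity")
    image_distance_mm = 1.0 / (1.0 / focal_length_mm - 1.0 / object_distance_mm)
    magnification = -image_distance_mm / object_distance_mm
    return image_distance_mm, magnification


# Hypothetical numbers: a display placed 150 mm from a mirror
# whose focal length is 200 mm (object inside the focal length).
d_i, m = virtual_image(focal_length_mm=200.0, object_distance_mm=150.0)
print(f"image distance: {d_i:.0f} mm, magnification: {m:.1f}x")
```

With the display inside the focal length, the image distance comes out negative (a virtual image) and the magnification is greater than one, which is why the projected information can appear larger and farther away than the display itself.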

Benefits of HUDs

Heads-up displays project visual information within a user’s current field of view. This provides several key benefits:

- Increases safety through improved focus and awareness

- Prioritizes and distills the most relevant information at the right time

- Relieves eyestrain caused by constantly changing focus

- Builds trust between autonomous vehicles and riders by showing that the system and human share the same reality

How Does a Heads-Up Display Work?

Shine your phone’s flashlight at a window and you’ll see both the light’s reflection and the world beyond the window. A heads-up display achieves a similar experience by reflecting a digital image off a transparent surface. This optical system provides information to the user in four steps.

- Image Creation: The Picture Generation Unit processes data into an image

- Light Projection: A light source then projects the image towards the desired surface

- Magnification: The light is reflected or refracted to magnify the image

- Optical Combination: The digital image lands on the combiner surface to overlap the real-world view

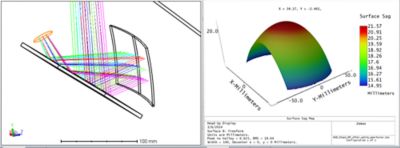
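The window analogy can be made quantitative with the Fresnel equations, which give the fraction of light reflected at a boundary between two transparent media. The sketch below is illustrative only: it assumes a single uncoated air-to-glass surface, unpolarized light, and a refractive index of 1.5, none of which come from the article, and the function name is hypothetical.

```python
import math


def fresnel_reflectance(incidence_deg: float, n1: float = 1.0, n2: float = 1.5) -> float:
    """Fraction of unpolarized light reflected at a single n1 -> n2 interface."""
    theta_i = math.radians(incidence_deg)
    sin_t = n1 / n2 * math.sin(theta_i)  # Snell's law
    if sin_t >= 1.0:
        return 1.0  # total internal reflection (only possible when n1 > n2)
    theta_t = math.asin(sin_t)
    cos_i, cos_t = math.cos(theta_i), math.cos(theta_t)
    # Reflectance for s- and p-polarized light, then the unpolarized average.
    r_s = ((n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)) ** 2
    r_p = ((n1 * cos_t - n2 * cos_i) / (n1 * cos_t + n2 * cos_i)) ** 2
    return 0.5 * (r_s + r_p)


# Reflected fraction at a few hypothetical viewing angles.
for angle in (0, 30, 60, 75):
    print(f"{angle:>2} deg: {fresnel_reflectance(angle):.1%} reflected")
```

Under these assumptions only a small share of the light is reflected at moderate angles and the rest passes through, which is consistent with seeing both the reflection and the scene behind the glass.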

Heads-Up Display Design

Heads-up displays involve human perception, making them very complex to design and test. Engineering metrics help designers meet a specification, but understanding how the human experience affects the process will determine the ultimate success.

To account for the human component, HUD designers use simulation. By digitally testing and validating their models, they can proactively address many scenarios and technical challenges, before or even without costly physical prototypes. These challenges include:

- Ghost images, warping value, and dynamic distortion

- Differences in physiology such as head position and color blindness

- Color shifts due to coated windshields or polarized glasses

- Contrast, legibility and luminance of projected images

- Sun interfering with readability and vision safety
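As one illustration of the first challenge, a ghost image can arise when light reflects off both the front and the back surface of a glass sheet, producing two slightly offset copies of the image. The sketch below estimates that offset for a flat, uniform plate using Snell's law. It is a rough geometric model under stated assumptions: real windshields are curved and laminated, and the thickness, angle, and refractive index used here are hypothetical.

```python
import math


def ghost_separation_mm(thickness_mm: float, incidence_deg: float, n_glass: float = 1.52) -> float:
    """Perpendicular distance between the front- and back-surface reflections.

    Models the glass as a flat plate with parallel faces: the ray refracts
    into the glass, reflects off the back face, and exits parallel to the
    front-surface reflection but shifted sideways.
    """
    theta_i = math.radians(incidence_deg)
    theta_t = math.asin(math.sin(theta_i) / n_glass)  # Snell's law, air -> glass
    return 2.0 * thickness_mm * math.tan(theta_t) * math.cos(theta_i)


# Hypothetical case: a 5 mm plate viewed at 60 degrees from the surface normal.
print(f"ghost offset: {ghost_separation_mm(5.0, 60.0):.2f} mm")
```

In this simple model the offset grows with glass thickness and vanishes at normal incidence, which hints at why ghosting depends on the installation angle of the reflecting surface.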

Example: Simulating Sun Reflection

Using simulation, engineers can predict how the sun’s reflection will affect the legibility of HUD information in different scenarios.

The simulation results show the sun reflecting off the mirrors inside the HUD. The four results use the same setup and sun position, with a different material applied to the HUD housing in each. Notice how, with each new material, the unwanted reflection becomes less noticeable. In the last image, reflections are entirely absorbed by the material.

Engineers and designers can benefit greatly by using optical simulation tools such as Ansys Zemax OpticStudio and Ansys Speos to simulate a system’s optical performance and evaluate the final illumination effect based on human vision.

With these simulation tools, you can:

- Assess reflections, visibility, and legibility as seen by an individual human observer

- Simulate visual predictions based on physiological human vision modeling

- Improve perceived visual quality by optimizing colors, contrasts, harmony, light uniformity, and intensity

- Consider ambient lighting conditions, including day and night vision
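To see why ambient lighting matters for legibility, consider a commonly used contrast ratio for see-through displays: the luminance of the image plus the background, divided by the luminance of the background alone. The sketch below is plain Python, not an Ansys Speos or OpticStudio API, and every luminance value in it is a hypothetical placeholder rather than a measured or recommended figure.

```python
def hud_contrast_ratio(image_luminance: float, background_luminance: float) -> float:
    """Contrast ratio of a see-through image against the scene behind it.

    Both luminances are in cd/m^2. The image is seen on top of the
    background, so the bright state is image + background.
    """
    if background_luminance <= 0:
        raise ValueError("background luminance must be positive")
    return (image_luminance + background_luminance) / background_luminance


# Hypothetical scene luminances in cd/m^2, for illustration only.
scenes = {"night road": 1.0, "overcast day": 1_000.0, "bright sky": 10_000.0}
image_luminance = 10_000.0  # hypothetical HUD image luminance

for name, background in scenes.items():
    ratio = hud_contrast_ratio(image_luminance, background)
    print(f"{name:>12}: contrast ratio {ratio:,.1f}")
```

The same image luminance yields a very high ratio at night and a much lower one against a bright sky, which is one reason day and night conditions are evaluated separately.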

HUD Raindrop Simulation

In the automotive industry, simulation can also help engineers see how a driver will experience a HUD in different weather scenarios. For example, a simulation can model the human experience of viewing the HUD in a rain shower.

Focused on the Future

As human performance becomes increasingly data-driven, the need to prioritize and deliver information to people in the fastest, safest ways will be at the forefront of innovation. In addition to automotive evolutions, HUD technology has the potential to help humans stay on task across a variety of industries, such as healthcare, infrastructure, and communications.
