Ansys computational fluid dynamics simulation scales to 39 qubits with NVIDIA CUDA-Q on Gefion supercomputer.

Computational fluid dynamics (CFD) simulations have become indispensable across aerospace, automotive, energy, and process industries. As design cycles accelerate and fidelity requirements rise, the size and complexity of CFD models continue to expand, driving demands for ever-greater memory capacity, finer spatial resolution, and faster turnaround times. Ansys has long led efforts to address these challenges, pioneering high-performance solvers and integrating high-performance computing (HPC) and artificial intelligence (AI) to accelerate convergence and reduce computational cost without compromising accuracy.

The Ansys CTO Office is actively engaged in researching quantum algorithms to accelerate partial differential equations (PDEs), with an initial focus on CFD due to the availability of well-established benchmarks from the research community. We have adopted the NVIDIA CUDA-Q open-source quantum development platform to build our quantum applications stack, enabling scalable GPU-based algorithm simulations in a noiseless environment today and seamless execution on quantum hardware as we transition beyond the noisy intermediate-scale quantum (NISQ) era. Once we identify an algorithm that scales to industrial sizes and complexity, we will advance to hardware demonstrations as the next step in our research. This iterative process enables us to converge on the most effective methods for quantum readiness.

The NVIDIA CUDA-Q platform’s flexibility in developing hybrid quantum-classical workflows and GPU-accelerated quantum circuit simulations has played a key role in enabling us to study how the algorithms we explore will scale.

Quantum Computing Meets CFD

Quantum algorithms seek to carefully exploit novel ways that information can be processed when encoded in quantum systems. Quantum systems seem intuitively appealing for computationally demanding CFD simulations, for two reasons in particular:

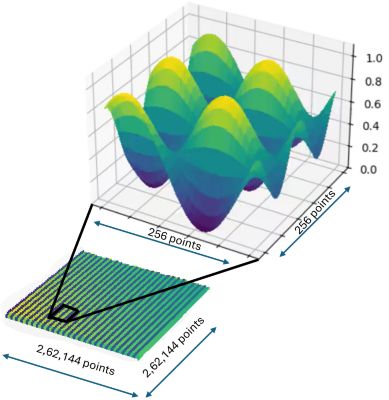

High-dimensionality: Unlike classical bits, quantum bits (known as qubits) can encode an amount of data that scales exponentially with their number. Every additional qubit doubles the addressable data space. By encoding an entire computational grid, potentially encompassing billions of points, in the amplitudes of a quantum state, quantum computing provides access to a vastly larger solution space. In fact, as demonstrated later in this blog, we solved a problem involving 68 billion grid points using just 39 qubits!

Parallel global updates: Quantum computing offers the possibility of advancing the data through the timesteps commonly used in CFD as coherent global operations performed in a single circuit execution. This would update all grid points simultaneously rather than through iterative kernel launches.

However, successfully employing these properties for useful CFD applications is far more nuanced than the above intuition suggests. It requires intricately constructed algorithms that allow not only the manipulation, but also the readout, of CFD information encoded in qubits. The Quantum Lattice Boltzmann Method (QLBM) is one means of accomplishing these goals.
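To make the encoding and readout points above a little more concrete, here is a minimal plain-Python sketch. It is not quantum code and not taken from the Ansys implementation: the helper names are hypothetical, and the "state" is just a normalized list of numbers standing in for the amplitudes of a grid register.

```python
import math


def qubits_needed(n_points):
    """Smallest number of qubits whose 2**n basis states cover n_points."""
    return max(1, (n_points - 1).bit_length())


def amplitude_encode(field):
    """Scale a field to unit Euclidean norm, as a quantum state requires.

    Returns the normalized amplitudes and the norm that was divided out.
    """
    norm = math.sqrt(sum(v * v for v in field))
    return [v / norm for v in field], norm


def measurement_probabilities(amplitudes):
    """Measuring in the computational basis yields |amplitude|**2."""
    return [a * a for a in amplitudes]


def decode(probabilities, norm):
    """Recover a non-negative field from exact probabilities.

    Assumption: the field has no negative values, because the sign of an
    amplitude is not visible in the measurement probabilities.
    """
    return [norm * math.sqrt(p) for p in probabilities]


field = [1.0, 2.0, 3.0, 4.0]             # toy density on 4 grid points
amplitudes, norm = amplitude_encode(field)
probabilities = measurement_probabilities(amplitudes)
recovered = decode(probabilities, norm)

print(qubits_needed(len(field)))         # 2
print(qubits_needed(2 ** 36))            # 36
```

On real hardware the probabilities are not available exactly; they have to be estimated from repeated measurements, which is one reason readout needs as much algorithmic care as the update itself.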

What Is the Quantum Lattice Boltzmann Method (QLBM)?

In CFD, the transport of a scalar density field is governed by the advection–diffusion equation, a canonical problem that is often an initial benchmark when developing classical numerical methods.

QLBM is a quantum-native implementation of the classical Lattice Boltzmann Method, adapted here to solve fluid simulation problems efficiently on quantum hardware. It is particularly well-suited for quantum CFD because of its inherently local and structured update rules, which can be naturally mapped to quantum circuits. QLBM retains the simplicity and modularity of LBM while unlocking the exponential data representation and processing power of quantum computing.

Each timestep in QLBM consists of four key operations:

- State Preparation: Initialize a "grid register" whose amplitudes encode the scalar density field over the discrete lattice.

- Collision: A linear combination of unitaries implements the collision operation. Ancilla qubits apart from the ones in the grid register are required for this step.

- Streaming: Perform controlled-shift operators to propagate amplitudes according to advection dynamics.

- Readout: Measure the quantum register to reconstruct the updated density distribution.

Together, these operations enable QLBM to perform a full timestep update across the entire lattice as a single, coherent quantum operation, eliminating the need for sequential point-wise updates typical in classical explicit time-marching schemes.
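For orientation, the classical counterpart of these four operations can be sketched in a few lines of plain Python. This is an illustrative reference only, not the quantum circuit and not the authors' code. It assumes a 1D three-velocity (D1Q3) lattice, a uniform advection velocity `u`, periodic boundaries, and a relaxation time of 1, so that collision simply resets each population to its equilibrium value. That makes the collision linear in the density, which is the kind of operation a linear combination of unitaries can represent.

```python
import math

# D1Q3 lattice: rest, right-moving and left-moving populations.
VELOCITIES = (0, 1, -1)
WEIGHTS = (2.0 / 3.0, 1.0 / 6.0, 1.0 / 6.0)
CS2 = 1.0 / 3.0  # lattice speed of sound squared


def prepare_density(n_points):
    """State-preparation analogue: one period of a sinusoid, kept positive."""
    return [1.0 + 0.5 * math.sin(2.0 * math.pi * x / n_points)
            for x in range(n_points)]


def collide(density, u):
    """Collision with relaxation time 1: populations become equilibria."""
    return [[w * rho * (1.0 + e * u / CS2) for rho in density]
            for e, w in zip(VELOCITIES, WEIGHTS)]


def stream(populations):
    """Streaming: shift each population by its lattice velocity (periodic)."""
    n = len(populations[0])
    return [[f[(x - e) % n] for x in range(n)]
            for e, f in zip(VELOCITIES, populations)]


def read_density(populations):
    """Readout analogue: sum the populations at each lattice point."""
    return [sum(values) for values in zip(*populations)]


def lbm_step(density, u):
    """One full timestep: collide, stream, then recover the density."""
    return read_density(stream(collide(density, u)))


def run(n_points=64, u=0.1, steps=50):
    density = prepare_density(n_points)
    for _ in range(steps):
        density = lbm_step(density, u)
    return density
```

Calling `run()` advects the sinusoid while diffusion damps its amplitude; the total of the density over the lattice stays constant. The classical loop visits every lattice point in each step, whereas the quantum formulation applies the corresponding operators to the whole register at once.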

Record-Scale 39-Qubit Simulation

In collaboration with NVIDIA, Ansys deployed CUDA-Q on 183 nodes on the Gefion supercomputer at DCAI, successfully executing a 39-qubit QLBM simulation:

- 36 space qubits: Encoding a 2^18 × 2^18 (262,144 × 262,144) 2D grid, about 68 billion degrees of freedom.

- 3 ancilla qubits: Supporting collision and streaming logic.

- Platform: The algorithm code, written in CUDA-Q, was first tested at small scale on local CPUs using the CUDA-Q “cpu” target. It was then scaled to an intermediate buildout on on-premises GPUs via the “nvidia” target. Finally, the same code ran at large scale on Gefion, on 183 nodes totalling 1464 GPUs, simply by changing the CUDA-Q target to “mgpu”. In the near future, the same code should be able to run on QPUs across the various qubit modalities supported by CUDA-Q.
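The sizes quoted in this list can be checked with a few lines of arithmetic. The sketch below assumes that the 36 space qubits are split evenly between the two grid axes, which matches the square grid reported for this run; the function name is illustrative.

```python
SPACE_QUBITS = 36
ANCILLA_QUBITS = 3
NODES = 183
GPUS_PER_NODE = 8


def grid_shape(space_qubits, dims=2):
    """Grid extent per axis when the qubits are split evenly across axes."""
    per_axis = space_qubits // dims
    return (2 ** per_axis,) * dims


shape = grid_shape(SPACE_QUBITS)
grid_points = shape[0] * shape[1]
total_qubits = SPACE_QUBITS + ANCILLA_QUBITS
total_gpus = NODES * GPUS_PER_NODE

print(shape)         # (262144, 262144)
print(grid_points)   # 68719476736, about 68.7 billion
print(total_qubits)  # 39
print(total_gpus)    # 1464
```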

Large-Scale AI Optimized Infrastructure

The simulation was run on Gefion, an AI supercomputer operated by the Danish Centre for AI Innovation (DCAI), which has a mission to accelerate AI across domains by providing cutting-edge computing capabilities. Gefion is based on the NVIDIA DGX SuperPOD architecture and ranks 21st on the TOP500 list of the most powerful supercomputers in the world.

The advanced compute fabric in Gefion connects the servers so that they work as one, offering a 3.2 Tbit/s connection on each node, which has been instrumental in allowing the algorithm to build and manipulate large quantum state vectors. The nvidia-mgpu target of the CUDA-Q framework was used to generate state vectors by pooling the GPU VRAM across the nodes, abstracting memory management away from the scientists.
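A back-of-the-envelope estimate shows why pooling memory across nodes matters at this scale. The sketch below is an assumption-laden illustration, not a description of the simulator's actual memory use: the numeric precision of the run is not stated here, so both single- and double-precision complex amplitudes are shown, and any workspace or communication buffers are ignored.

```python
TOTAL_QUBITS = 39

# Bytes per complex amplitude; which precision the run used is an assumption.
BYTES_PER_AMPLITUDE = {"complex64": 8, "complex128": 16}


def statevector_bytes(n_qubits, precision):
    """Raw storage for a full state vector of n_qubits."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE[precision]


for precision in BYTES_PER_AMPLITUDE:
    size = statevector_bytes(TOTAL_QUBITS, precision)
    print(f"{precision}: {size / 2**40:.0f} TiB for one 39-qubit state vector")
# complex64: 4 TiB for one 39-qubit state vector
# complex128: 8 TiB for one 39-qubit state vector
```

Either figure is far beyond the memory of a single GPU, and each additional qubit doubles it, so the state vector has to be distributed across many devices.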

At peak execution, the simulation used 183 DGX nodes, each with eight H100 GPUs, for a total of 1464 GPUs delivering approximately 85.7 PFLOPS (FP64 tensor). The compute interconnect is a high-speed, octo-rail NVIDIA Quantum-2 InfiniBand network in which each GPU has a directly attached 400 Gbit/s connection to the compute fabric, moving tens of gigabytes of data between GPUs every second. The storage system uses an 800 Gbit/s connection, achieving over 200 GB/s IO500 bandwidth (563 GB/s “easy write” and 910 GB/s “easy read”).

Gefion has been a perfect testbed for the parallelization of computations during the project, allowing smooth distribution of the analysis components across the cluster. The adaptive resource allocation model and the operations team of HPC experts allowed the project to scale to the maximum performance of the hardware seamlessly.

Uniform advection-diffusion of a 2D sinusoid, simulated using 39 qubits, on a 262,144 × 262,144 grid

By integrating Ansys’ deep expertise in solver development with pioneering strides in quantum algorithm research on NVIDIA’s high-performance CUDA-Q platform, we have established a robust foundation for quantum-accelerated fluid dynamics. As quantum computing techniques advance, this work represents a valuable progression and helps chart a systematic path toward industrial-scale quantum CFD development — addressing the ever-increasing computational demands of tomorrow’s engineering challenges.

For more information, read “Algorithmic Advances Towards a Realizable Quantum Lattice Boltzmann Method.”
